Show sample citations & bib entries for PRs that validate #13

(and, as a second optional layer on top, a diff versus an appropriate comparison style could also be very useful, e.g. using something like https://github.com/kpdecker/jsdiff, which can generate output such as

What would be the benefit of pulling the data from a public group vs having it in the test fixtures?

This was purely pragmatic -- having them in the fixture is fine too. I was thinking of going through the Zotero API as one option, and for that purpose having the items available in a group makes getting the bib possible with a single

Alright, no major difference between the two anyhow. So, the main ask here is to

(with the rendering done using citeproc-js, I assume)?

Yes, I think that's basically it. I think we'll only want to do this when the other tests pass, though.

Right. I'm making some headway, but citeproc is a little sparsely documented, so I'm going through their test cases to piece things together.

What is the relation between the spec tests in the

Alright, I have diffs rendering, but citeproc in its various incarnations could really do with better documentation. Oy vey. I've stuck to citeproc-ruby because the output really shouldn't differ from citeproc-js and the existing tests used Ruby.

Sorry, hadn't seen the question above: the test-suite tests correct citeproc behavior and has no relationship to the styles repo. Every test includes its own style.

Any thoughts on what to use as the items to render?

Here's a set of four that I like (recycled from the CSL editor): https://gist.github.com/adam3smith/7f0c65f116d5e17df0c23901198f42c7

It's been a while since I used Ruby -- how are

Ah, never mind, I can just use Travis hooks.

I'm still not happy with the way things look when added as a comment. I've put up a sample here (apologies for the junk PR I accidentally opened on the actual styles repo).

What are you not happy with? You could maybe quote it as in the examples above, but I think functionally this is very nice already.

I've added a 2nd sample that quotes it. It still looks a little crowded to me, but if it's good enough for you guys, that's what counts. I'm still playing with diffy to output decent-looking diffs, but GH comments are pretty narrow and it gets unreadable fast. Differ does inline colored diffs, which are easier to read, but the library hasn't seen updates in 8 years, and issues on the repo don't get picked up. I'm hesitant to bake in tech debt; OTOH, it does work.

Can anyone point me to a style that changed in a way that the sample references might show a difference in rendering? The random samples I looked at did stuff like et-al fixes that wouldn't trigger on the number of authors in the samples, or for other reasons showed no differences.

Here are two that would show up in diffs, though I think only for the journal article in both cases:

I like the quoted look -- once we add some surrounding text, I think that'll be great.

Alright - you could start thinking about what you want to show when the render differs and when not. I could perhaps have the rspec tests only test the changed files in a PR, but there are two sets of styles being tested, and I don't understand the difference between them.

Alright, I've added a new sample with 3 changes: one where a style changed but the output did not, one where the style changed and the output also changed, and one where a new style was added. Old or not, differ is the only one that I could find that did char-by-char coloration of diffs. Thoughts? Also, any thoughts on what the diff should diff? Text diffs are going to be more readable than markup diffs, but then markup changes would be lost in the report.

I mean, I see tests on "dependents" and "independents" but I don't know what those terms mean in this context.

Are you familiar with dependent CSL styles at all (like https://github.com/citation-style-language/styles/blob/master/dependent/nature-biotechnology.csl)? Dependent CSL styles inherit their style format from the referenced "independent-parent", and are heavily used for large publishers like Elsevier and Springer Nature that have thousands of journals that only use a handful of distinct citation formats. They're stored in the "dependent" directory of the "styles" repo. See also https://docs.citationstyles.org/en/stable/specification.html#file-types.
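To make the distinction concrete: a dependent style carries no formatting of its own, only a `<link>` with `rel="independent-parent"` that points at the style it inherits from. Below is a minimal Ruby sketch of pulling that reference out. The helper name `independent_parent` and the sample style (`example-journal`, `example-parent`) are made up for illustration, and a real implementation would probably use a proper XML parser rather than regular expressions.

```ruby
# Trimmed, hypothetical dependent style; the IDs are illustrative only.
SAMPLE_DEPENDENT = <<~XML
  <?xml version="1.0" encoding="utf-8"?>
  <style xmlns="http://purl.org/net/xbiblio/csl" version="1.0" default-locale="en-US">
    <info>
      <title>Example Journal</title>
      <id>http://www.zotero.org/styles/example-journal</id>
      <link href="http://www.zotero.org/styles/example-journal" rel="self"/>
      <link href="http://www.zotero.org/styles/example-parent" rel="independent-parent"/>
    </info>
  </style>
XML

# Returns the href of the independent-parent link, or nil when the style
# has no such link (i.e. it is an independent style).
def independent_parent(csl_xml)
  csl_xml.scan(/<link\b[^>]*>/).each do |tag|
    # Attribute order is not fixed, so check rel and href separately.
    next unless tag =~ /\brel\s*=\s*"independent-parent"/
    href = tag[/\bhref\s*=\s*"([^"]*)"/, 1]
    return href if href
  end
  nil
end

puts independent_parent(SAMPLE_DEPENDENT)
```

A renderer could use a check like this to decide whether to render a changed file directly or to look up and render its parent instead.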

jsdiff does too (although it currently can't diff markup differences). See http://incaseofstairs.com/jsdiff/.

Especially if we envision rendering citations for every commit in a pull request, we might also want to collapse things a bit, e.g.:

Changed: apa.csl

(“CSL search by example,” 2012; Hancké, Rhodes, & Thatcher, 2007)

CSL search by example. (2012). Retrieved December 15, 2012, from Citation Style Editor website: http://editor.citationstyles.org/searchByExample/

(using

Nope.

Turns out GH comments don't allow coloration. It's possible to do line-by-line coloration by using the diff format, but from my experience that's only of limited use for CSL styles, because I figure you'd generally want to know what changed in the line. But it's all we're going to get.
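For what it's worth, the line-by-line fallback could look roughly like the sketch below. It compares the old and new renders position by position, which is a simplification and not a real diff algorithm. `diff_comment` is a hypothetical helper, not something that exists in Sheldon.

```ruby
# Hypothetical helper: builds a GitHub comment body that uses a "diff" code
# fence, so lines starting with "-" render red and lines starting with "+"
# render green.
def diff_comment(old_lines, new_lines)
  fence = "`" * 3 # built at runtime to avoid a literal fence inside this example
  body = []
  old_lines.zip(new_lines).each do |old_line, new_line|
    if old_line == new_line
      body << "  #{old_line}" # unchanged context line
    else
      body << "- #{old_line}" if old_line
      body << "+ #{new_line}" if new_line
    end
  end
  # Lines that only exist in the new render are treated as additions.
  new_lines.drop(old_lines.length).each { |line| body << "+ #{line}" }
  (["#{fence}diff"] + body + [fence]).join("\n")
end

puts diff_comment(
  ["(Hancké, Rhodes, & Thatcher, 2007)"],
  ["(Hancké et al., 2007)"]
)
```

This only highlights whole lines, so a one-character change in a long bibliography entry still shows up as a full red/green pair.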

I've put up two new samples, one which does line-by-line diffs of HTML, the other of markdownified HTML.

Still working on getting the rspec failure output

I like the line-by-line diffs of HTML best. HTML tags are easier to spot than markdown markup. Maybe you could tweak the summary labels a little as well? E.g.:

(@adam3smith, let me know if you think that's an improvement)

And did you already put a limit on the number of styles you're rendering? It's not uncommon to have the occasional pull request that touches several hundred or even thousands of styles, so limiting the render to e.g. no more than 10 styles would be a good idea. And assuming you'll be handling dependent CSL styles as well, it might be good to:

I've put up a new sample. It turns out it is possible to do inline diffs using There's currently no limit; all changed styles are rendered. WRT dependents/independents:

I will look at your point 2.
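If a cap does get added, a minimal sketch might look like the following. It assumes the list of changed paths comes from something like `git diff --name-only`, that dependent styles live under `dependent/` while independent styles sit at the repo root, and that independents should be rendered first. `styles_to_render` and `MAX_RENDERED` are hypothetical names, and the ordering is a choice made for this sketch only.

```ruby
MAX_RENDERED = 10 # the cap floated in this thread; the exact number is a judgment call

# Hypothetical helper: picks the changed CSL styles to render and reports
# how many were left out because of the cap.
def styles_to_render(changed_paths, limit: MAX_RENDERED)
  styles = changed_paths.select { |path| File.extname(path) == ".csl" }
  # Assumption: everything under dependent/ is a dependent style.
  dependents, independents = styles.partition { |path| path.start_with?("dependent/") }
  ordered = independents.sort + dependents.sort
  { render: ordered.first(limit), skipped: [ordered.length - limit, 0].max }
end

changed = ["apa.csl", "README.md", "dependent/nature-biotechnology.csl"]
result = styles_to_render(changed)
puts "Rendering: #{result[:render].join(', ')} (skipped #{result[:skipped]})"
```

Reporting the skipped count lets the comment say something like "and N more styles not shown" instead of silently truncating.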

I don't think this really matters for us as long as there is some documentation somewhere on what file-dependencies there are.

See the three comments by @csl-bot in e.g. citation-style-language/locales#188. We'd have a welcome comment, and for each commit a success or failure comment. We'd want to insert the test failures directly again here as well, instead of just linking to the Travis CI report. It would be good if your implementation would still provide links to the Travis CI reports, by the way, for both "styles" and "locales" repositories (e.g. underneath the test with a "(see for the full Travis CI test report)").

I've added a README to the Sheldon branch that explains the expectations it has on the styles and locales repos; all files live in

I'm terribly sorry about this, but I had forgotten that in PRs, secret variables like GitHub tokens are not set (for good reasons). This means PR builds cannot post directly to GH. I've been talking to Travis support about this (who are super responsive!), and it looks like the best option is to mostly keep Sheldon but also keep the stuff I've done and have them cooperate.

The reason now-Sheldon can't (reasonably) do everything on its own is that you really need a checked-out repo at the exact point where the PR runs, as well as access to the master branch and the Travis vars. We'd be trying to replicate the Travis build environment on Heroku (which is where shel-bot runs, right?).

The coop solution would be to keep shel-gem mostly as-is, but have it not post to GitHub and instead either upload its output to transfer.sh or just output it to the console. shel-bot would then parse out either the transfer.sh response URL or the shel-gem output from the Travis log, and post that. There are upsides and downsides to each of these coop modes:

I don't currently see a way around this. I'm trying to think of a format which would look good enough to post in the log that would also be re-parsable for shel-bot.

I think it makes sense to go with the transfer.sh option and see if we're having issues with stability. Given that one of the reasons for doing this is to improve the experience for style contributors, clean output would be important.

I'm trying a few services - transfer.sh is really slow (10-20 seconds) and in some of my tests it simply times out when I try to post the assets. Edit: this may be a rate limit, because I'm now consistently hitting this, but I've also occasionally seen transfer.sh just keel over.

0x0.st explicitly forbids "spamming the service with CI build assets", and file.io has both a monthly limit of 100 uploads (and it's probably enforced by IP address, so from a CI service we'd be pooled in with anyone else using it) and some kind of rate limit (I sometimes get "too many requests" even from my home system). If anyone knows other services of the kind, I'd love to hear about it, but I'm currently looking for different solutions altogether.

One other option would be to set up an S3 or Backblaze bucket which allows anon uploads but only authenticated downloads, with an auto-expiry policy to keep the bucket size down. I use this setup for BBT logs and I've only once broken the 8ct/month limit, which was when I was furiously putting out new builds in the Z5 port. Benefit of this would be that there'd need to be nothing in the logs -- shel-bot could just pick it up at an agreed name.

There's one other way that just struck me -- technically possible but ho-hum from a beauty point of view. The Travis log renderer honors ANSI escape sequences, so I could output the shel-bot message, erase it using backspaces, which would remove it from view but it would remain in the actual log where I could parse it out.
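A rough sketch of that idea, under stated assumptions: the report is Base64-encoded so it stays on one log line, wrapped in made-up markers (`SHELDON-REPORT-BEGIN` / `SHELDON-REPORT-END`), and followed by enough backspaces to move the cursor back over it. Whether the Travis log viewer actually hides the line this way is not verified here; the parsing side works on the raw log either way.

```ruby
BEGIN_MARK = "SHELDON-REPORT-BEGIN" # hypothetical marker
END_MARK   = "SHELDON-REPORT-END"   # hypothetical marker

# Travis side: wrap the report so it can be found again in the raw log.
def hidden_log_line(report)
  payload = [report].pack("m0") # strict Base64, no embedded newlines
  visible = "#{BEGIN_MARK}#{payload}#{END_MARK}"
  # Backspaces move the cursor back over the text so later output can
  # overwrite it in a terminal-style renderer (unverified for Travis).
  visible + ("\b" * visible.length)
end

# Bot side: recover the report from the raw log text, or nil if absent.
def extract_report(raw_log)
  match = raw_log.match(/#{BEGIN_MARK}([A-Za-z0-9+\/=]*)#{END_MARK}/)
  return nil unless match
  match[1].unpack1("m0").force_encoding("UTF-8")
end

log = "Running tests...\n" + hidden_log_line("Changed: apa.csl") + "\nDone.\n"
puts extract_report(log)
```

Encoding the payload avoids having to escape newlines or marker-like text inside the report itself, at the cost of an unreadable blob if the hiding trick does not work.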

There's an ungodly amount of magic going on in rspec -- I'm getting when I run

In a clean rvm setup, I'm hitting

Wait, I don't need rspec -- I just need to run

This is driving me nuts. What I see in the Rakefile seems to use minitest, but then the actual spec files use rspec's

Alright, more clarity: the csl gems pull in newer versions of rspec, it seems, and the existing test setup does not like this. No errors, but no tests ran. The quick way to solve this is to keep shel-bot and shel-gem on different branches. I do worry a little about the technical debt shel-bot is going to accumulate over time. I had tried to merge shel-bot and shel-gem (which should be possible), but realistically, that would require some gems in shel-bot to be updated, and due to the amount of magic going on in rspec, it's not easy to find out which gem is injecting what into the various namespaces.

@retorquere I've not been following along, but on a quick glance

My bad. I've been able to get it to work by pinning gems to the exact versions I get when the tests run on Travis. I've not been able to get the tests to run by just having the gems all at their latest versions. If this pinning is OK, I can proceed to merge the shel-gem part; it doesn't actually touch shel-bot (yet), just adds the gem-installable executable to the existing Sheldon so that the Travis counterpart can be easily installed on

I'm a bit confused by that gemspec file. Most gems don't package test/spec files at all, in my experience; the test files also don't seem to be added to That said, I'm really out of the loop here, so I may be missing the point.

Ah no, the gemspec file isn't complete yet, but for the shel-bot part nothing changes; the gemspec is really only being used by gem consumers. I can run Using the gemspec is really the same as using the Gemfile from the point of view of shel-bot -- a lockfile is also generated as normal. That said, I'm no expert on gem packaging, I'm just cribbing things together best I can. Turns out The In the end, the idea is that the gem-part offers a ruby script that generates a GH-friendly summary of the test results, which the bot-part can pick up. I could just have either the gem-part on a separate branch, or even a separate repo, but given that these two would work so closely together, at least the same repo would make most sense to me.

Is the part that's going to be run on Travis also run by the stateless web service on Heroku?

No, but the part that's going to be run on Heroku will expect there to be something in the Travis log that's put there by the script in

I'm going to start moving stuff back into the Gemfile -- I had misinterpreted the gemspec stuff I found to say that everything needs to be there, which is not the case.

Cool, I think that's better (I'd just leave runtime deps in the gemspec). If the Travis script does not run on Heroku, I'd also put its dependencies into a separate group in the Gemfile which is not installed on Heroku. But in general, maybe it would be easier to use a separate repo for this (because I'm not sure that you could actually keep dependencies defined in the gemspec file out of the bundle created on Heroku).

It may be possible to have the gem deps not installed on Heroku; the current Gemfile adds a section called

(other than that it turns out I only had to pin hashdiff because newer versions of hashdiff have a namespace conflict with another gem).

OK, I think I have things prepped now. Shel-bot should be unaffected other than picking up the build report from the log, and the sheldon gem now lives in a single script. I'm going to deploy it to my own Heroku instance to see how it works out.

Two questions:

Maybe you're missing a webhook (https://github.com/citation-style-language/styles/blob/0e4b1f80ed20ccf3a88febebf2c526aa734d80e1/.travis.yml#L14)?

I have it figured out (and tested); the PRs are ready.

... and waiting for your input: #15 (comment)

OK, I think everything is in place now. Sheldon is running on heroku-18, and

adam3smith commented Apr 26, 2019 (edited by rmzelle)

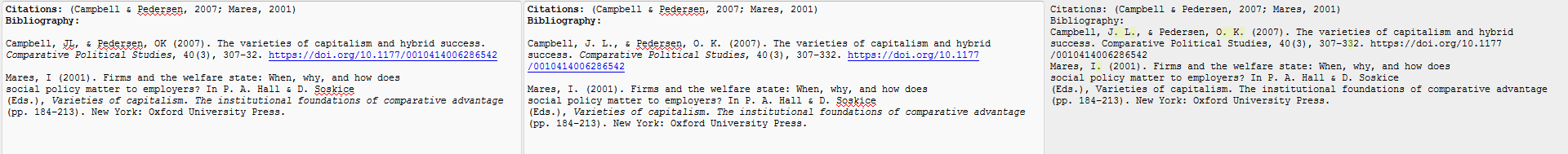

Just going to do a simple mock-up:

Currently Sheldon posts this when a PR gets opened:

Followed by this when the PR passes our tests:

I'd suggest leaving the first message as is, but would like the second one to be something like:

Where citations and bibliography are generated from the new style (I was thinking we could have the data in a public Zotero group and simply use API calls to Zotero, but I'm agnostic about the method to be used).