Join GitHub today

GitHub is home to over 40 million developers working together to host and review code, manage projects, and build software together.

Sign up[REVIEW]: PyBIDS: Python tools for BIDS datasets #1294

Comments

whedon

assigned

cMadan

whedon

assigned

cMadan

Mar 3, 2019

whedon

added

the

review

label

whedon

added

the

review

label

Mar 3, 2019

whedon

referenced this issue

whedon

referenced this issue

Mar 3, 2019

Closed

[PRE REVIEW]: PyBIDS: Python tools for BIDS datasets #1289

This comment has been minimized.

This comment has been minimized.

|

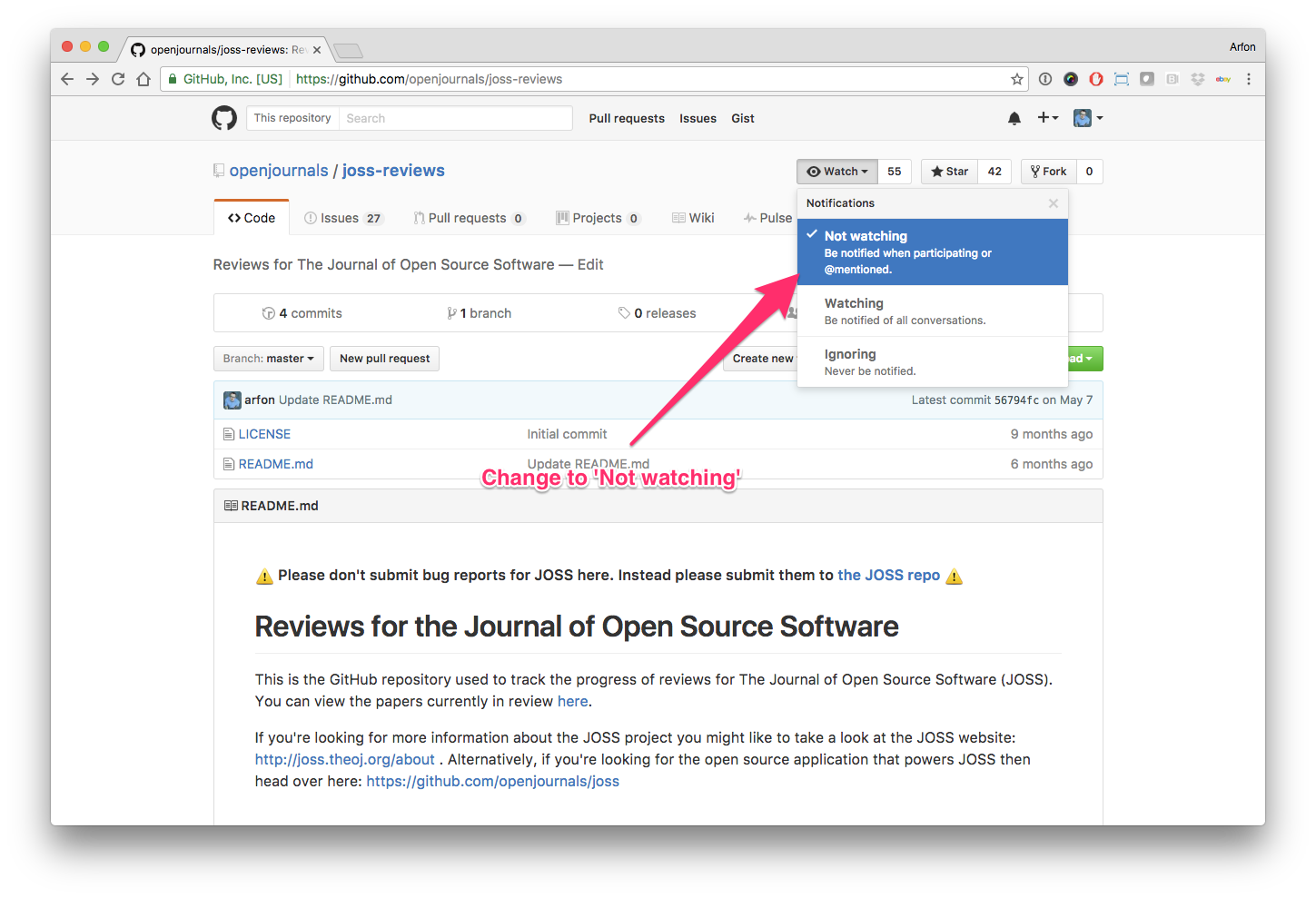

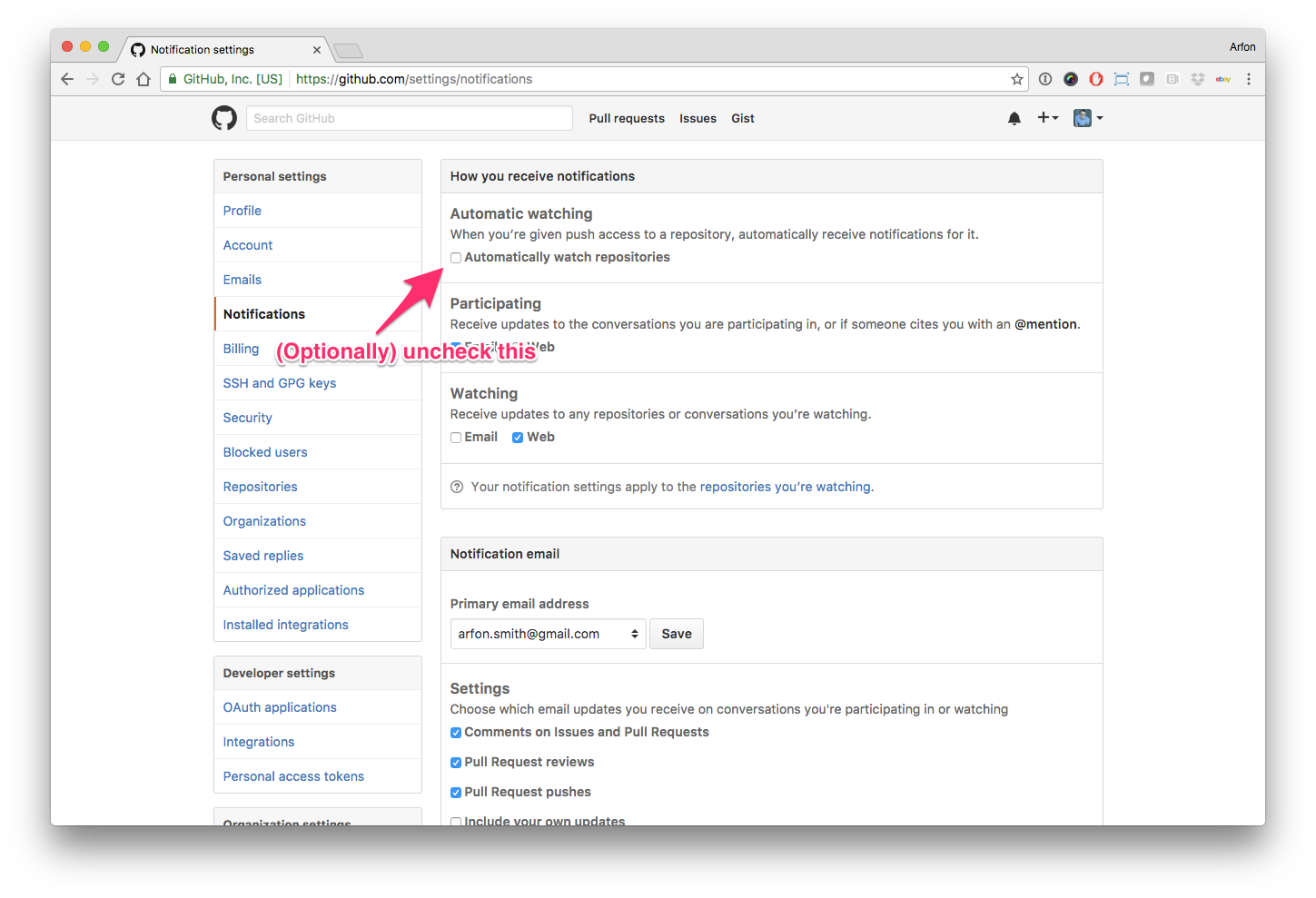

Hello human, I'm @whedon, a robot that can help you with some common editorial tasks. @grlee77 it looks like you're currently assigned as the reviewer for this paper If you haven't already, you should seriously consider unsubscribing from GitHub notifications for this (https://github.com/openjournals/joss-reviews) repository. As a reviewer, you're probably currently watching this repository which means for GitHub's default behaviour you will receive notifications (emails) for all reviews To fix this do the following two things:

For a list of things I can do to help you, just type: |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

@tyarkoni, I see that you have list your authors as "[LastName], [FirstName]". I'm not sure if that's actually against our rules, but normally authors are just listed as "[FirstName] [LastName]". Is there a particular reason you decided to list them this way? |

This comment has been minimized.

This comment has been minimized.

tyarkoni

commented

Mar 4, 2019

|

I adapted it from the repo's .zenodo.json file. Convention for that seems to be [LastName], [FirstName]. |

This comment has been minimized.

This comment has been minimized.

|

@arfon, do you have any thoughts on the author list formatting here? I don't recall any prior JOSS papers using "[LastName], [FirstName]" formatting, but you'd know better than me--and if we want to require a specific formatting (e.g., for consistency). @grlee77, let me know if you have any questions in starting the review! |

This comment has been minimized.

This comment has been minimized.

Yes, please have author names as a single string (firstname lastname) with any middle initials, e.g. Arfon M Smith |

This comment has been minimized.

This comment has been minimized.

grlee77

commented

Mar 12, 2019

|

I opened a couple of minor issues: and commented on (bids-standard/pybids#245) after an initial read-through of the docs and examples. I plan to finish the checklist and review by tomorrow. |

This comment has been minimized.

This comment has been minimized.

grlee77

commented

Mar 13, 2019

•

|

It looks like the approach here was to include all contributors to the repository. I just had a question about whether all coauthors have already actively confirmed their desire to be included? @cMadan: If past contributors have not been notified and explicitly agreed to be included in the publication then it seems they would need to be removed from the list? The JOSS author guidelines state "The authors themselves assume responsibility for deciding who should be credited with co-authorship, and co-authors must always agree to be listed. In addition, co-authors agree to be accountable for all aspects of the work."

|

This comment has been minimized.

This comment has been minimized.

grlee77

commented

Mar 13, 2019

|

The submitted paper is well written and nicely summarizes the capabilities of the software. The proposed software is likely to be useful to any researchers working with data in the BIDS format and complements an increasingly diverse ecosystem of python-based neuroimaging analysis software. The software functions as advertised. I was able to run the included example notebook without issues and tried out some other variations on the examples given in the documentation. Aside from the minor documentation issues already raised above, the following minor issues in relation to the review criteria above were found: 1.) In regards to the "Community guidelines" criterion, I did not see any mention about how to contribute or raise issues/get help in the documentation. This is likely self-evident to those already working with GitHub/open source, but it would be useful to include something brief to guide new contributors. 2.) Some of the references are missing a DOI in 3.) The software is tested using automated CI across platforms and python versions, but there are currently a handful of test failures on the master branch (Appveyor is listed as failing on the main project page) |

This comment has been minimized.

This comment has been minimized.

grlee77

commented

Mar 13, 2019

|

The following are not specific to review checklist above, but more about two things that were not entirely clear to me as a new user when going through the examples in the documentation. 1.) There was an example using the 2.) In the documentation section on generating reports, it is mentioned that the reports are returned as a main_report = counter.most_common()[0][0]but does not comment on why the indexing [0][0] is needed or when one would typically want or need to use the other elements returned by |

This comment has been minimized.

This comment has been minimized.

tyarkoni

commented

Mar 19, 2019

|

@grlee77 all authors did not actively confirm their desire to be included; instead I gave people the option of opting out (and none did). Does that work? I can also repeat the request, this time requesting opt-in, but I'm concerned that there may be some people who contributed to the repo a while back and aren't paying close attention (I know from experience that there can be a mental hurdle in following up on such requests, even if it only takes a minute or two). Similarly, for some of the authors, I have no contact information aside from GitHub handle, and a few folks don't seem to be active. |

This comment has been minimized.

This comment has been minimized.

tyarkoni

commented

Mar 19, 2019

•

Thanks for catching this. I've added a "Community guidelines" section to the top-level README (see bids-standard/pybids#418).

This is fixed now; see rebuilt PDF below.

We currently don't officially support Windows, so this isn't technically an issue for us (though I realize it doesn't look great). We haven't removed the Appveyor tests in the hopes that someone might spontaneously come along and fix this for us (it will likely require a fairly thorough dive through the codebase), but I've added a note to the README indicating the scope of official support.

This was documented elsewhere (in the

@tsalo, could you comment on this and/or make any required changes? Thanks! |

This comment has been minimized.

This comment has been minimized.

tyarkoni

commented

Mar 19, 2019

|

@whedon generate pdf from branch joss-review |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

tyarkoni

commented

Mar 19, 2019

|

@grlee77 I think I've now addressed all of the comments in this thread and on the pybids repo, pending one minor issue that I've asked @tsalo (who authored the code in question) to look at. The joss-review branch collects all changes. Please let me know if there's anything else. Thanks for your effort and excellent feedback! |

This comment has been minimized.

This comment has been minimized.

tsalo

commented

Mar 19, 2019

|

BIDSReport returns a Counter because missing data or variable acquisition parameters across participants can lead to different reports. With a Counter, users can access the most common report, which is likely to be the most accurate in cases with missing data, or they can access everything if different participants had different protocols. I can add this rationale to the documentation somewhere or can make BIDSReport only return the most common report, if you'd like. |

This comment has been minimized.

This comment has been minimized.

tyarkoni

commented

Mar 19, 2019

|

I think adding that rationale to the docstring would work. Feel free to add to the |

This comment has been minimized.

This comment has been minimized.

grlee77

commented

Mar 19, 2019

This comment has been minimized.

This comment has been minimized.

effigies

commented

Mar 19, 2019

|

As another data point, there was a metadata gathering thread (bids-standard/pybids#308) for Zenodo. There were a couple people who never responded, but none who requested not to be authors. I recognize Zenodo isn't JOSS, but if you're looking for active consent to be considered authors on the software (if not the paper), that thread is a worthwhile one. |

This comment has been minimized.

This comment has been minimized.

grlee77

commented

Mar 19, 2019

|

Thanks for addressing the issues @tyarkoni. I reviewed the changes in the joss-review branch and they look good to me. I just checked off the remaining items in the review checklist.

I suppose you could remove the Appveyor badge from the readme until support is officially added, but not a big deal either way |

This comment has been minimized.

This comment has been minimized.

Most of the other JOSS submissions I've reviewed had shorter author lists and everyone was aware of their inclusion. @openjournals/joss-eics, is there any precedence of having JOSS co-authorship be opt-out as described here? |

This comment has been minimized.

This comment has been minimized.

Not that I know of. I'd be curious to hear how you notified them @tyarkoni? |

This comment has been minimized.

This comment has been minimized.

tyarkoni

commented

Mar 23, 2019

•

|

I opened a PR in the repo soliciting feedback, plus we had the earlier discussion related to the Zenodo DOI @effigies linked to above. I could have sworn I explicitly mentioned authorship, but reading the various issues and PRs over, it appears not. Sorry about that! Happy to raise the issue more explicitly, and will tag everyone by name this time. I still feel pretty strongly that making it opt-in will result in several people who are happy to be authors and have made meaningful contributions being left off, but if you prefer opt-in to opt-out, I'm fine with that. We call also trial the former and then make a decision based on feedback. [Edit: FWIW, this seems like an issue that will arise again in future; e.g., I have other repos with a lot of contributors that I plan to submit to JOSS at some point. So it might make sense to address this explicitly in the guidelines one way or the other.] |

This comment has been minimized.

This comment has been minimized.

tyarkoni

commented

Mar 23, 2019

|

Okay, see the request here. I haven't indicated exactly what we'll take a non-response to mean, in the hopes of pushing people to respond, but let's see what happens. |

This comment has been minimized.

This comment has been minimized.

Yes, that’s fine. |

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

effigies

commented

Aug 9, 2019

|

Turns out, @tyarkoni doesn't have the magic touch for Zenodo. It's @yarikoptic who started the archive (bids-standard/pybids#299), so only he has the power. Yarik, would you mind updating the title of https://zenodo.org/record/3333492 (specifically that one) to "PyBIDS: Python tools for BIDS datasets". |

This comment has been minimized.

This comment has been minimized.

yarikoptic

commented

Aug 9, 2019

|

This comment has been minimized.

This comment has been minimized.

effigies

commented

Aug 9, 2019

•

|

I haven't been able to find a way to share super powers on my repositories. We should probably open an issue with Zenodo, because this is extremely inconvenient for projects that don't have a BDFL. Edit: This was reported by a familiar face two years ago. zenodo/zenodo#1325 Related to zenodo/zenodo#35, zenodo/zenodo#151, zenodo/zenodo#163 |

This comment has been minimized.

This comment has been minimized.

effigies

commented

Aug 9, 2019

|

@yarikoptic I wonder if hitting "Publish" instead of "Save" would make a difference. |

This comment has been minimized.

This comment has been minimized.

effigies

commented

Aug 10, 2019

|

@cMadan I'm going to see if I can update the title through our metadata (bids-standard/pybids#470). In which case, we'll go with the 0.9.4 tag. |

This comment has been minimized.

This comment has been minimized.

yarikoptic

commented

Aug 12, 2019

Sorry for the delay!!! I just did it and it published. But now it doesn't show the version in the title. I wonder if I should add it ( |

This comment has been minimized.

This comment has been minimized.

effigies

commented

Aug 12, 2019

|

@yarikoptic The version is listed on the page, so I'm not too worried. @cMadan WDYT? |

This comment has been minimized.

This comment has been minimized.

|

@yarikoptic @effigies, I don't think the version needs to be in the title since it's prominent elsewhere. |

This comment has been minimized.

This comment has been minimized.

|

@whedon set 0.9.3 as version |

This comment has been minimized.

This comment has been minimized.

|

OK. 0.9.3 is the version. |

This comment has been minimized.

This comment has been minimized.

|

@whedon set 0.9.2 as version |

This comment has been minimized.

This comment has been minimized.

|

OK. 0.9.2 is the version. |

This comment has been minimized.

This comment has been minimized.

|

@whedon set 10.5281/zenodo.3333492 as archive |

This comment has been minimized.

This comment has been minimized.

|

OK. 10.5281/zenodo.3333492 is the archive. |

This comment has been minimized.

This comment has been minimized.

|

@openjournals/joss-eics, I think we're all set to accept here! |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Aug 12, 2019

|

@whedon accept |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

Check final proof If the paper PDF and Crossref deposit XML look good in openjournals/joss-papers#896, then you can now move forward with accepting the submission by compiling again with the flag |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Aug 12, 2019

|

@whedon accept deposit=true |

This comment has been minimized.

This comment has been minimized.

|

whedon

added

the

accepted

label

whedon

added

the

accepted

label

Aug 12, 2019

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Aug 12, 2019

This comment has been minimized.

This comment has been minimized.

|

|

This comment has been minimized.

This comment has been minimized.

|

Here's what you must now do:

Any issues? notify your editorial technical team... |

danielskatz

closed this

danielskatz

closed this

Aug 12, 2019

This comment has been minimized.

This comment has been minimized.

|

If you would like to include a link to your paper from your README use the following code snippets: This is how it will look in your documentation: We need your help! Journal of Open Source Software is a community-run journal and relies upon volunteer effort. If you'd like to support us please consider doing either one (or both) of the the following:

|

whedon commentedMar 3, 2019

•

edited

Submitting author: @tyarkoni (Tal Yarkoni)

Repository: https://github.com/bids-standard/pybids

Version: 0.9.2

Editor: @cMadan

Reviewer: @grlee77

Archive: 10.5281/zenodo.3333492

Status

Status badge code:

Reviewers and authors:

Please avoid lengthy details of difficulties in the review thread. Instead, please create a new issue in the target repository and link to those issues (especially acceptance-blockers) in the review thread below. (For completists: if the target issue tracker is also on GitHub, linking the review thread in the issue or vice versa will create corresponding breadcrumb trails in the link target.)

Reviewer instructions & questions

@grlee77, please carry out your review in this issue by updating the checklist below. If you cannot edit the checklist please:

The reviewer guidelines are available here: https://joss.theoj.org/about#reviewer_guidelines. Any questions/concerns please let @cMadan know.

Review checklist for @grlee77

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper

paper.mdfile include a list of authors with their affiliations?