Trip Report: SIGMOD/PODS 2019

It’s not often that you get a major international conference in your area of interest around the corner from your house. Luckily for me, that just happened. From June 30th to July 5th, SIGMOD/PODS was hosted here in Amsterdam. SIGMOD/PODS is one of the major conferences on databases and data management. Before diving into the event itself, I want to thank Peter Boncz, Stefan Manegold, Hannes Mühleisen and the whole organizing team (from @CWI_DA and the NL DB community) for getting this massive conference here:

and pulling off things like this:

Oh, and really nice badges too. Good job!


Surprisingly, this was the first time I’ve been at SIGMOD. While I’m pretty well acquainted with the database literature, I’ve always just hung out in different spots. Hence, I had some trepidation about attending. Would I fit in? Who would I talk to over coffee? Would all the papers be about join algorithms or the implications of cache misses on some new tree data structure variant? Now, obviously this is all pretty bogus thinking; just looking at the proceedings would tell you that. But there’s nothing like attending in person to bust preconceived notions. Yes, there were papers on hardware performance and join algorithms (which were, by the way, pretty interesting), but there were also many papers on other data management problems, many of which we are trying to tackle (e.g. provenance, messy data integration). Also, there were many colleagues that I knew (e.g. Olaf & Jeff above). Anyway, perceptions busted! Sorry, DB friends, you might have to put up with me some more 😀.

I was at the conference for the better part of six days. That’s a lot of material, so I definitely missed plenty, but here are the four themes I took away from the conference.

- Data management for machine learning

- Machine learning for data management

- New applications of provenance

- Software & The Data Center Computer

Data Management for Machine Learning

Matei Zaharia (Stanford/Databricks) on the need for data management for ML

The success of machine learning has rightly changed computer science as a field. In particular, the data management community writ large has reacted by trying to address the needs of machine learning practitioners with data management systems. This was a major theme at SIGMOD.

There were a number of what I would term holistic systems that help manage and improve the process of building ML pipelines, including the data that goes into them. Snorkel DryBell provides a holistic system that lets engineers employ external knowledge (knowledge graphs, dictionaries, rules) to reduce the number of training examples needed to create new classifiers. Vizier provides a notebook data science environment backed fully by a provenance-aware data management layer, which allows data science pipelines to be debugged and reused. Apple presented their in-house data management system, designed specifically for machine learning. From my understanding, all their data is completely provenance-enabled, ensuring that ML engineers know exactly what data they can use for what kinds of model-building tasks.
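The weak-supervision idea behind Snorkel DryBell can be illustrated with a small sketch: several noisy "labeling functions" encode external knowledge (a dictionary, a rule, a heuristic) and vote on unlabeled examples. This is a simplification under stated assumptions: the task, the term lists and the labeling functions are all hypothetical, and where Snorkel learns a model of how accurate each labeling function is, this sketch just takes a majority vote.

```python
from collections import Counter
from typing import Callable, Optional

# Hypothetical binary task: is a product title about electronics?
NOT_ELECTRONICS, ELECTRONICS = 0, 1
ABSTAIN = None

# Hypothetical "external knowledge": a tiny dictionary and a keyword rule.
ELECTRONICS_TERMS = {"laptop", "headphones", "charger", "monitor"}
CLOTHING_TERMS = {"shirt", "jacket", "sock"}

def lf_dictionary(text: str) -> Optional[int]:
    """Vote ELECTRONICS if any dictionary term appears as a word, else abstain."""
    return ELECTRONICS if set(text.lower().split()) & ELECTRONICS_TERMS else ABSTAIN

def lf_clothing(text: str) -> Optional[int]:
    """Rule: clothing words suggest NOT_ELECTRONICS, else abstain."""
    return NOT_ELECTRONICS if set(text.lower().split()) & CLOTHING_TERMS else ABSTAIN

def lf_voltage(text: str) -> Optional[int]:
    """Heuristic: a token such as '5v' or '220v' suggests electronics."""
    tokens = text.lower().split()
    return ELECTRONICS if any(t.endswith("v") and t[:-1].isdigit() for t in tokens) else ABSTAIN

LABELING_FUNCTIONS: list[Callable[[str], Optional[int]]] = [lf_dictionary, lf_clothing, lf_voltage]

def weak_label(text: str) -> Optional[int]:
    """Majority vote over non-abstaining labeling functions; abstain on ties or no votes."""
    votes = [v for v in (lf(text) for lf in LABELING_FUNCTIONS) if v is not None]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN  # conflicting votes with no majority
    return ranked[0][0]

for title in ["usb charger 5v", "cotton shirt", "laptop jacket", "blue mug"]:
    print(title, "->", weak_label(title))
```

The resulting (noisy) labels would then be used to train an ordinary classifier, which is where the savings in hand-labeled examples come from.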

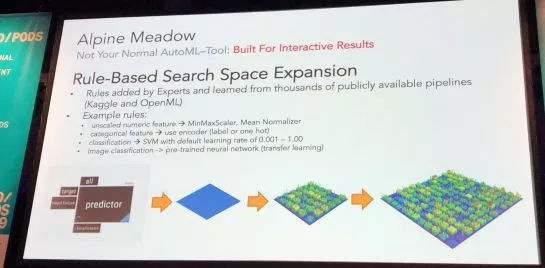

I think the other thread here is the use of real-world datasets to drive these systems. The example I found most compelling was Alpine Meadow++, which uses knowledge about ML datasets (e.g. from Kaggle) to improve its suggestions of new ML pipelines in an AutoML setting.
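One simple way to use knowledge about past datasets to suggest pipelines is nearest-neighbour meta-learning: describe each dataset by a few meta-features and recommend whatever worked on the most similar ones. This is a generic heuristic chosen for illustration, not Alpine Meadow++’s actual method; the meta-features, the history and the pipeline names are all made up.

```python
import math
from collections import Counter

# Hypothetical history of past datasets: meta-features -> pipeline that worked best.
# Meta-features: (log10 #rows, log10 #features, fraction of categorical columns).
HISTORY = [
    ((3.0, 1.0, 0.8), "one-hot + gradient boosting"),
    ((3.2, 1.1, 0.7), "one-hot + gradient boosting"),
    ((5.0, 3.0, 0.0), "standardize + logistic regression"),
    ((5.5, 3.2, 0.1), "standardize + logistic regression"),
    ((4.0, 2.0, 0.4), "impute + random forest"),
]

def suggest_pipelines(meta, history=HISTORY, k=3):
    """Rank pipelines by how often they won on the k most similar past datasets."""
    nearest = sorted(history, key=lambda item: math.dist(meta, item[0]))[:k]
    votes = Counter(pipeline for _, pipeline in nearest)
    return [pipeline for pipeline, _ in votes.most_common()]

# A new, small, mostly categorical dataset.
print(suggest_pipelines((3.1, 1.0, 0.75)))
```

In a real AutoML system the ranked suggestions would seed the search over pipelines rather than replace it.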

On a similar note, I found the work of Suhail Rehman from the University of Chicago on using over 1 million Jupyter notebooks to understand data analysis workflows particularly interesting. In general, the notion is that we need to look at the whole model-building and analysis problem holistically, inclusive of data management. This was emphasized by the folks behind the Magellan entity matching project in their paper "Entity Matching Meets Data Science".

Machine Learning for Data Management

On the flip side, machine learning is rapidly influencing data management itself. The aforementioned Magellan project has developed a deep learning entity matcher. Knowledge graph construction and maintenance rely heavily on ML (see also the new work from Luna Dong and colleagues, which she talked about at SIGMOD). Likewise, ML is being used to detect data quality issues (e.g. HoloDetect).

ML is also impacting even lower levels of the data management stack.

Tim Kraska’s list of algorithms that have been or are being MLified

I went to the tutorial on Learned Data-intensive Systems from Stratos Idreos and Tim Kraska. They gave an overview of how machine learning could be used to augment or replace parts of a database system, or even the whole thing, and when that might be useful.

It was quite good; I hope they put the slides up somewhere. The key notion for me was instance optimality: by using machine learning, we can tailor performance to specific users and applications, whereas in the past this was not cost-effective because of the programmer effort it required. They suggested four ways to create instance-optimized algorithms and data structures:


- Synthesize traditional algorithms using a model

- Use a CDF model of the data in your system to tailor the algorithm

- Use a prediction model as part of your algorithm

- Try to learn the entire algorithm or data structure
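The CDF idea in the second bullet is easiest to see in a toy learned index: if a model predicts roughly where a key sits in a sorted array, a lookup only needs to search a small window around that prediction. This is a minimal sketch assuming a single least-squares linear model; the published learned index work uses hierarchies of models, and the class name here is hypothetical.

```python
import bisect

class LinearLearnedIndex:
    """Toy learned index: one linear model approximates the CDF of the sorted keys
    (key -> position), and the worst-case training error bounds a local search."""

    def __init__(self, keys: list[float]) -> None:
        if not keys:
            raise ValueError("need at least one key")
        self.keys = sorted(keys)
        n = len(self.keys)
        # Least-squares fit of position ~ slope * key + intercept.
        mean_k = sum(self.keys) / n
        mean_p = (n - 1) / 2
        var_k = sum((k - mean_k) ** 2 for k in self.keys)
        cov = sum((k - mean_k) * (p - mean_p) for p, k in enumerate(self.keys))
        self.slope = cov / var_k if var_k else 0.0
        self.intercept = mean_p - self.slope * mean_k
        # Largest gap between predicted and true position over all stored keys.
        self.max_err = max(abs(self._predict(k) - p) for p, k in enumerate(self.keys))

    def _predict(self, key: float) -> int:
        """Predicted position, clamped to the valid index range."""
        pos = round(self.slope * key + self.intercept)
        return min(max(pos, 0), len(self.keys) - 1)

    def lookup(self, key: float) -> int:
        """Index of the first occurrence of key, or -1 if it is absent."""
        guess = self._predict(key)
        lo = max(0, guess - self.max_err)
        hi = min(len(self.keys), guess + self.max_err + 1)
        # Binary search only inside the model's error window.
        i = bisect.bisect_left(self.keys, key, lo, hi)
        return i if i < hi and self.keys[i] == key else -1

keys = [x * x for x in range(1, 200)]  # a skewed (quadratic) key distribution
index = LinearLearnedIndex(keys)
print(index.max_err, index.lookup(49), index.lookup(50))
```

How much this saves depends entirely on how well the model fits the data: on skewed keys like these a single line fits poorly and `max_err` grows, which is exactly why tailoring the model to each data distribution matters.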

They had quite the laundry list of recent papers tackling this approach, and this seems like a super hot topic.

Another example was SkinnerDB, which uses reinforcement learning to learn optimal join orders on the fly. I told you there were interesting papers on joins.
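To give a flavour of learning join orders on the fly, here is a sketch that treats each join order as an arm of a multi-armed bandit and uses the UCB1 rule to balance trying new orders against exploiting the best one seen so far. This is a deliberately simplified stand-in: SkinnerDB uses a tree-search variant of this idea (UCT) over partial join orders and interleaves short execution time slices, whereas this version enumerates full permutations, so it only makes sense for a handful of tables. The `progress_of` callback and the table sizes are hypothetical; the callback stands in for running one time slice with a given order and reporting progress in [0, 1].

```python
import math
from itertools import permutations
from typing import Callable, Sequence

def ucb1_join_order(
    tables: Sequence[str],
    progress_of: Callable[[tuple[str, ...]], float],
    rounds: int = 500,
    c: float = 1.0,
) -> tuple[tuple[str, ...], int]:
    """Return the join order pulled most often by UCB1, and its pull count."""
    arms = list(permutations(tables))
    pulls = [0] * len(arms)
    reward_sum = [0.0] * len(arms)
    for t in range(1, rounds + 1):
        if t <= len(arms):
            arm = t - 1  # try every order once first
        else:
            # Mean reward plus an exploration bonus that shrinks as an arm is pulled.
            arm = max(
                range(len(arms)),
                key=lambda i: reward_sum[i] / pulls[i] + c * math.sqrt(2 * math.log(t) / pulls[i]),
            )
        pulls[arm] += 1
        reward_sum[arm] += progress_of(arms[arm])
    best = max(range(len(arms)), key=lambda i: pulls[i])
    return arms[best], pulls[best]

sizes = {"R": 1000, "S": 10, "T": 100}  # hypothetical table cardinalities

def progress_of(order):
    # Pretend cost: scan the first table plus a fake intermediate result size.
    first, second, _ = order
    cost = sizes[first] + sizes[first] * sizes[second] * 0.01
    return 20 / cost  # the cheapest order, (S, T, R), yields 1.0

print(ucb1_join_order(["R", "S", "T"], progress_of))
```

Because the bonus term decays only logarithmically, clearly bad orders still get a few trial slices, which bounds how much time is wasted on them without ever committing blindly to a cost estimate.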

New Provenance Applications

There was an entire session of SIGMOD devoted to provenance, which was cool. What I liked about the papers was that they presented several new applications of provenance, or optimizations for applications, beyond auditing or debugging.

- Explain surprising results to users – Zhengjie Miao, Qitian Zeng, Boris Glavic, and Sudeepa Roy. 2019. Going Beyond Provenance: Explaining Query Answers with Pattern-based Counterbalances. In Proceedings of the 2019 International Conference on Management of Data (SIGMOD ’19). ACM, New York, NY, USA, 485-502. DOI: https://doi.org/10.1145/3299869.3300066

- Creating small counterexamples to help with debugging – Zhengjie Miao, Sudeepa Roy, and Jun Yang. 2019. Explaining Wrong Queries Using Small Examples. In Proceedings of the 2019 International Conference on Management of Data (SIGMOD ’19). ACM, New York, NY, USA, 503-520. DOI: https://doi.org/10.1145/3299869.3319866

- Suggesting optimizations for graph analytics – Vicky Papavasileiou, Ken Yocum, and Alin Deutsch. 2019. Ariadne: Online Provenance for Big Graph Analytics. In Proceedings of the 2019 International Conference on Management of Data (SIGMOD ’19). ACM, New York, NY, USA, 521-536. DOI: https://doi.org/10.1145/3299869.3300091 – they also do a cool thing where they execute the provenance query together with the graph analytics query, cutting overhead.

- Hypothetical reasoning, i.e. what happens to a query I’ve already run if I modify this data – Daniel Deutch, Yuval Moskovitch, and Noam Rinetzky. 2019. Hypothetical Reasoning via Provenance Abstraction. In Proceedings of the 2019 International Conference on Management of Data (SIGMOD ’19). ACM, New York, NY, USA, 537-554. DOI: https://doi.org/10.1145/3299869.3300084
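Several of these applications rest on the same primitive: recording which input tuples each answer depends on. Below is a minimal sketch of why-provenance (sets of "witness" tuples) for a join-then-project query, plus a naive check of whether an answer survives a hypothetical deletion. The relations are made up, and this is plain witness-set bookkeeping, not the technique from the Deutch et al. paper, which (as its title suggests) is about abstracting such provenance to keep it manageable.

```python
from itertools import product

# Hypothetical relations; each tuple is keyed by a provenance id.
emp = {"e1": ("alice", "db"), "e2": ("bob", "ml"), "e3": ("carol", "db")}
dept = {"d1": ("db", "amsterdam"), "d2": ("ml", "amsterdam"), "d3": ("db", "zurich")}

def cities_with_provenance(emp, dept):
    """Evaluate SELECT DISTINCT city FROM emp JOIN dept ON emp.dept = dept.dept,
    recording for each city the witnesses (sets of tuple ids) that produce it."""
    result: dict[str, set[frozenset[str]]] = {}
    for (eid, (_, e_dept)), (did, (d_dept, city)) in product(emp.items(), dept.items()):
        if e_dept == d_dept:
            # A join result needs both inputs; projection collects alternative witnesses.
            result.setdefault(city, set()).add(frozenset({eid, did}))
    return result

def survives(witnesses, deleted):
    """An answer survives deleting some tuples iff at least one witness avoids all of them."""
    return any(not (w & deleted) for w in witnesses)

prov = cities_with_provenance(emp, dept)
print(survives(prov["zurich"], {"d3"}), survives(prov["amsterdam"], {"d1"}))
```

The what-if question is answered without re-running the query: deleting `d3` removes Zurich, while Amsterdam survives deleting `d1` because the witness `{e2, d2}` still supports it.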

In addition to these new applications, I saw some nice new provenance capture systems:

- C2Metadata – capturing provenance from statistical scripts and creating documentation

- Pebble – capturing provenance for nested data in Spark

- Ursprung – system-level provenance capture with rule based configuration

Software & The Data Center Computer

This is less of a common theme, but something that just struck me. Microsoft discussed their overhaul of the database-as-a-service they offer in Azure. Likewise, Apple discussed FoundationDB, the multi-tenant database that underlies CloudKit.

JD.com discussed their new file system for handling containers and ML workloads across clusters with tens of thousands of servers. These are not applications that are hosted in the cloud; instead, they treat the data center itself as the computer. They are fundamentally designed with the idea that they will be executed on a big chunk of an entire data center. I know my friends in supercomputing have been doing this for ages, but I always wonder how to change one’s mindset to think about building applications that big, and not only building them but also upgrading and maintaining them.

Wrap-up

Overall, this was a fantastic conference. Beyond the excellent technical content, from a personal point of view, it was really eye-opening to marinate in the community. From the point of view of the Amsterdam tech community, it was exciting to have an Amsterdam Data Science Meetup with over 500 people.

If you weren’t there, video of much of the event is available.

Random Notes

- Note to conference organizers – nice badges are appreciated [1,2].

- Default conference languages are interesting. SIGMOD/PODS assumption: all conversations can build from SQL. ISWC assumption: all conversations can build from RDF/SPARQL/OWL/HTTP. NLP assumption: all conversations can build up from shared task X.

- Blockchain brain dump

- DARPA Data Driven Discovery of Models

- Webish Tables

- JOSIE: Overlap Set Similarity Search for Finding Joinable Tables in Data Lakes

- Profiling the semantics of n-ary web tables

- Automatically Generating Interesting Facts from Wikipedia Tables – nice to see the call out to the Web Tables work from Bizer and the Semweb community in general.

- Scaling CSV parsing

- http://dataspread.github.io

- Compute and memory requirements for deep learning

- Let’s not just talk about fairness – we can do things about it:

- Cool to have a beer with Frank McSherry and Peter Boncz and listen to them talk about the implications of PCI express lane bandwidth and cache misses on DB performance.

- Oh and micro-services + provenance + organizational mergers = 💡

- Lots of moves toward the convergence of graph query languages

- Maybe all conferences should just be 10 minutes away from my house 😉

- If you want to understand differential privacy, watch Cynthia Dwork’s amazing keynote.

- Juan Sequeda – ahead of the curve: