Join GitHub today

GitHub is home to over 40 million developers working together to host and review code, manage projects, and build software together.

Sign up[REVIEW]: LISC: A Python Package for Scientific Literature Collection and Analysis #1674

Comments

This comment has been minimized.

This comment has been minimized.

|

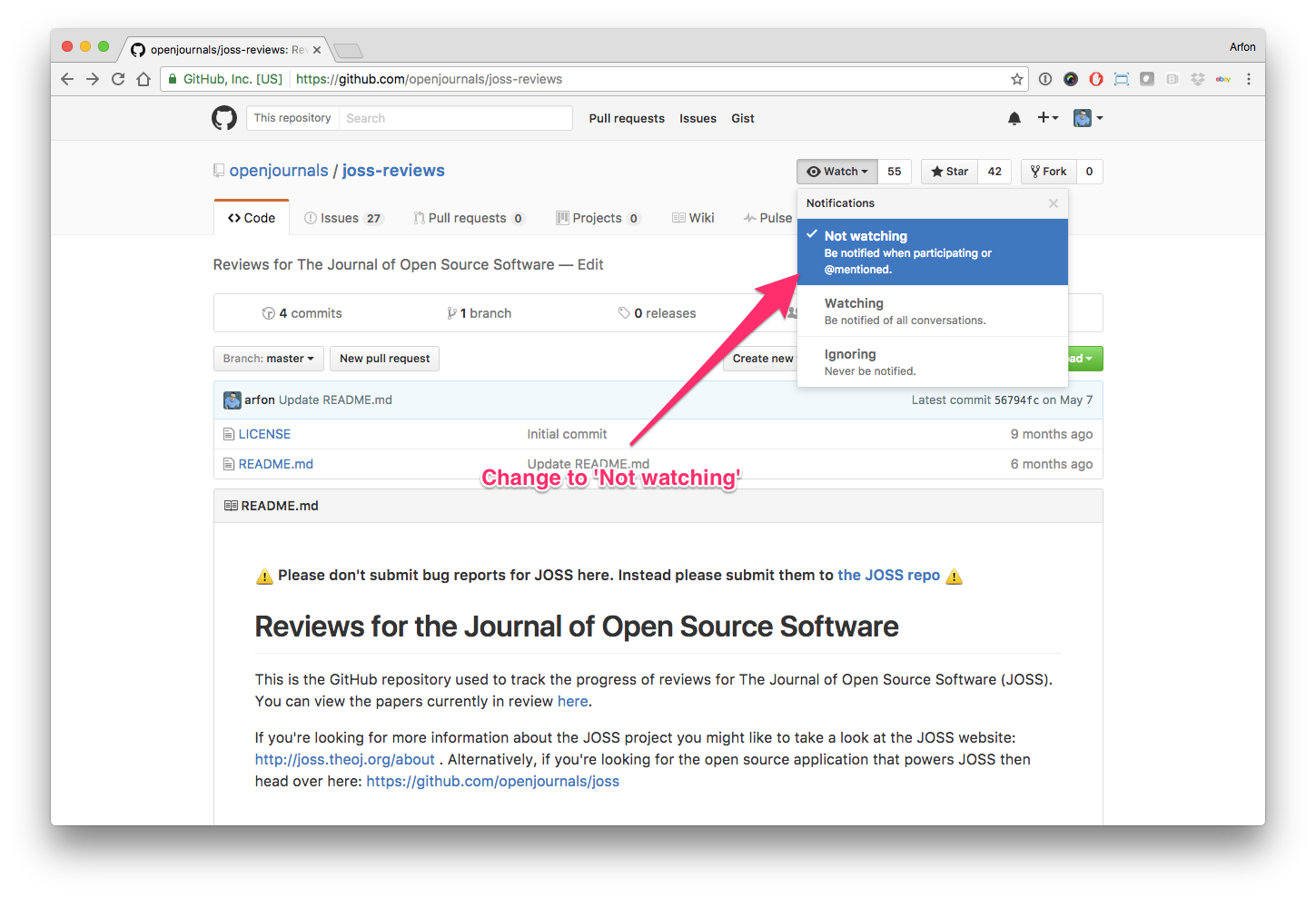

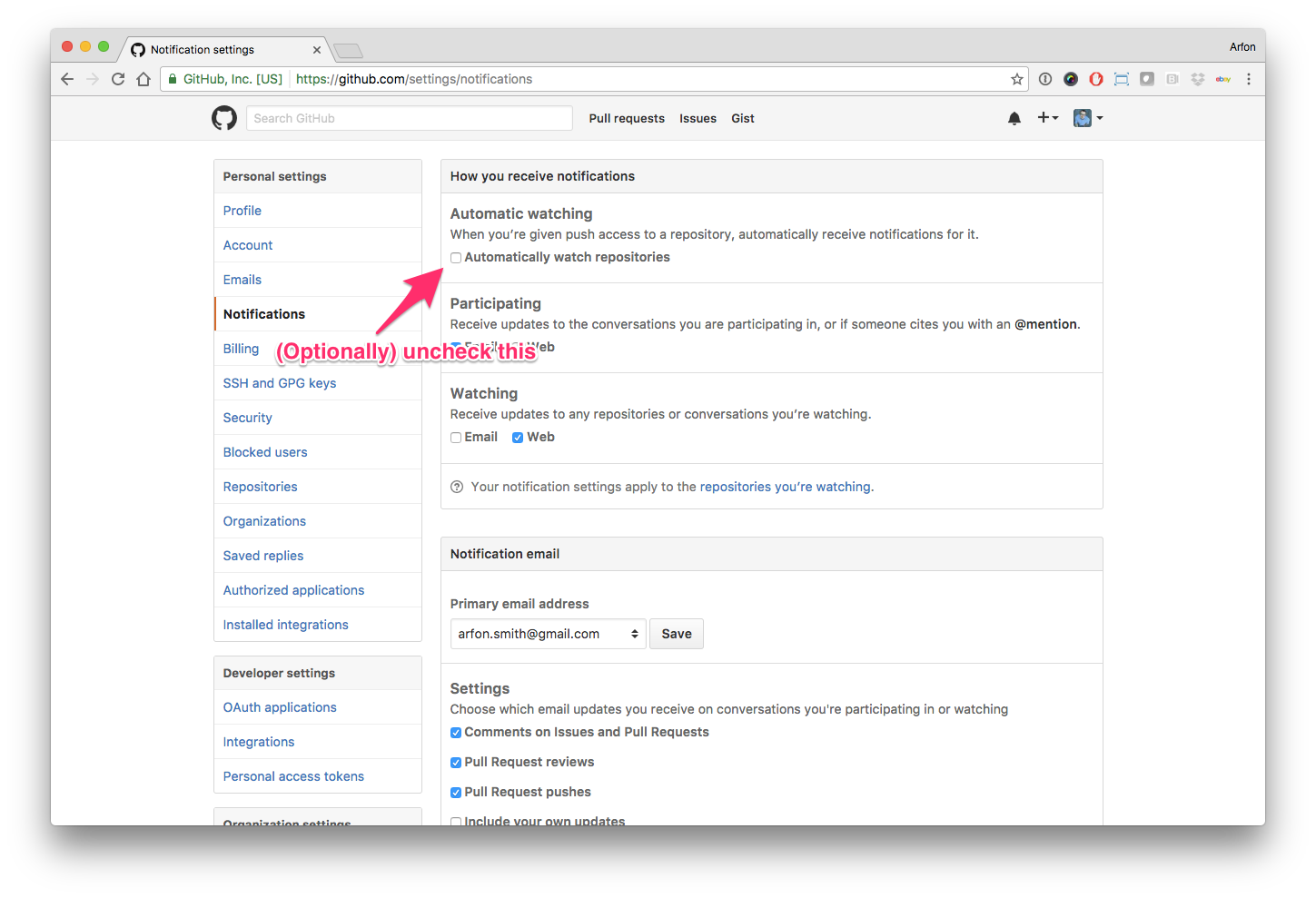

Hello human, I'm @whedon, a robot that can help you with some common editorial tasks. @timClicks, @linuxscout it looks like you're currently assigned to review this paper If you haven't already, you should seriously consider unsubscribing from GitHub notifications for this (https://github.com/openjournals/joss-reviews) repository. As a reviewer, you're probably currently watching this repository which means for GitHub's default behaviour you will receive notifications (emails) for all reviews To fix this do the following two things:

For a list of things I can do to help you, just type: For example, to regenerate the paper pdf after making changes in the paper's md or bib files, type: |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Aug 22, 2019

•

|

|

whedon

assigned

linuxscout and

timClicks

whedon

assigned

linuxscout and

timClicks

Aug 23, 2019

This comment has been minimized.

This comment has been minimized.

|

Thanks @danielskatz. I'm also away until the middle of next week. I will aim to have my review completed by next Friday. |

This comment has been minimized.

This comment has been minimized.

|

I work on review |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 1, 2019

|

|

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 1, 2019

|

@whedon remind @timClicks in 6 days |

This comment has been minimized.

This comment has been minimized.

|

Reminder set for @timClicks in 6 days |

This comment has been minimized.

This comment has been minimized.

|

I work on testing the software. |

This comment has been minimized.

This comment has been minimized.

|

No automated tests |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 1, 2019

|

As we say in the review criteria

So we do not require automated tests (though we encourage them), but we do require some tests. In this case, if there are manual tests that you think are sufficient "to objectively check the expected functionality of the software (e.g. a sample input file to assert behavior)", that's enough for this criterion to be checked. |

This comment has been minimized.

This comment has been minimized.

|

I think that tutorials are good as tests. |

This comment has been minimized.

This comment has been minimized.

TomDonoghue

commented

Sep 1, 2019

•

|

There are tests! In the folder lisc/tests is a set of unit-tests. So, from the repository home, they are in They are, I would say often smoke-tests, in that there is not always strong accuracy checking, but there are at least sanity check tests. They are written with pytest. If you want to run them locally, then, with pytest installed, from inside the lisc code folder (so in I realize now it's not really documented anywhere how to run the tests. Is there a convention on how / where to note tests and how to run them? Tests are also integrated with travis: |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 1, 2019

|

You might add this info to the README, but certainly should add it to https://lisc-tools.github.io/lisc/, perhaps after installation (after you install, you test to make sure things are working...) |

This comment has been minimized.

This comment has been minimized.

|

What I should do now? |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 6, 2019

Nothing - your part is done, and thanks! We're now waiting for @timClicks to do his review, check off his items, bring up needed changes, etc. He was away, but said he would have his review done around now. I think we're also waiting for @TomDonoghue to add something about how to run the tests to the documentation. |

This comment has been minimized.

This comment has been minimized.

|

|

This comment has been minimized.

This comment has been minimized.

|

@danielskatz My recommendation: accept, subject to the test amendments that have been mentioned already :) I've worked through the tutorials, and think that this a very handy package. In general, the API documentation is in an OK state, rather than a Good state (as per the review criteria). That reflects the relative immaturity of the project. lisc is in a much better state than other packages that are released at a 0.1 state. As a side comment, the tests are written using a very common test framework for Python applications (pytest). However, this isn't included as a dependency in the requirements.txt file so installing the test framework manually is required. @TomDonoghue thanks for taking the time to submit this to JOSS. @danielskatz @linuxscout @TomDonoghue Sincerely apologise for delaying this process. |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 8, 2019

|

|

This comment has been minimized.

This comment has been minimized.

TomDonoghue

commented

Sep 9, 2019

|

@whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

TomDonoghue

commented

Sep 9, 2019

|

@whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

TomDonoghue

commented

Sep 9, 2019

|

@whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

TomDonoghue

commented

Sep 9, 2019

|

Test Coverage In terms of noting the test coverage, what I have done is add a section to the README, which gets built as the homepage of the documentation page, which mentions the tests, and how to run them, and the test dependency of pytest. I'm actually not too sure what is considered convention / best practice for documenting tests & test coverage - so please let me know if this isn't the best answer! I haven't, for example, typically listed 'pytest' in the API Documentation Paper Notes Thanks! A huge thank you to @linuxscout & @timClicks for doing the review, and @danielskatz for managing! Please let me know if there is anything else I should do! |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 9, 2019

|

|

This comment has been minimized.

This comment has been minimized.

|

@danielskatz LGTM. I've reviewed the new paper and the new README. Both look fine. |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 13, 2019

|

|

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 17, 2019

|

|

This comment has been minimized.

This comment has been minimized.

|

Hi, |

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 18, 2019

|

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 18, 2019

|

@whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

Sep 18, 2019

|

@whedon check references |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

whedon commentedAug 22, 2019

•

edited by timClicks

Submitting author: @TomDonoghue (Thomas Donoghue)

Repository: https://github.com/lisc-tools/lisc

Version: v0.1.0

Editor: @danielskatz

Reviewer: @timClicks, @linuxscout

Archive: Pending

Status

Status badge code:

Reviewers and authors:

Please avoid lengthy details of difficulties in the review thread. Instead, please create a new issue in the target repository and link to those issues (especially acceptance-blockers) by leaving comments in the review thread below. (For completists: if the target issue tracker is also on GitHub, linking the review thread in the issue or vice versa will create corresponding breadcrumb trails in the link target.)

Reviewer instructions & questions

@timClicks & @linuxscout, please carry out your review in this issue by updating the checklist below. If you cannot edit the checklist please:

The reviewer guidelines are available here: https://joss.readthedocs.io/en/latest/reviewer_guidelines.html. Any questions/concerns please let @danielskatz know.

Review checklist for @timClicks

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper

paper.mdfile include a list of authors with their affiliations?Review checklist for @linuxscout

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper

paper.mdfile include a list of authors with their affiliations?