Join GitHub today

GitHub is home to over 40 million developers working together to host and review code, manage projects, and build software together.

Sign up[REVIEW]: PyUoI: The Union of Intersections Framework in Python #1799

Comments

This comment has been minimized.

This comment has been minimized.

|

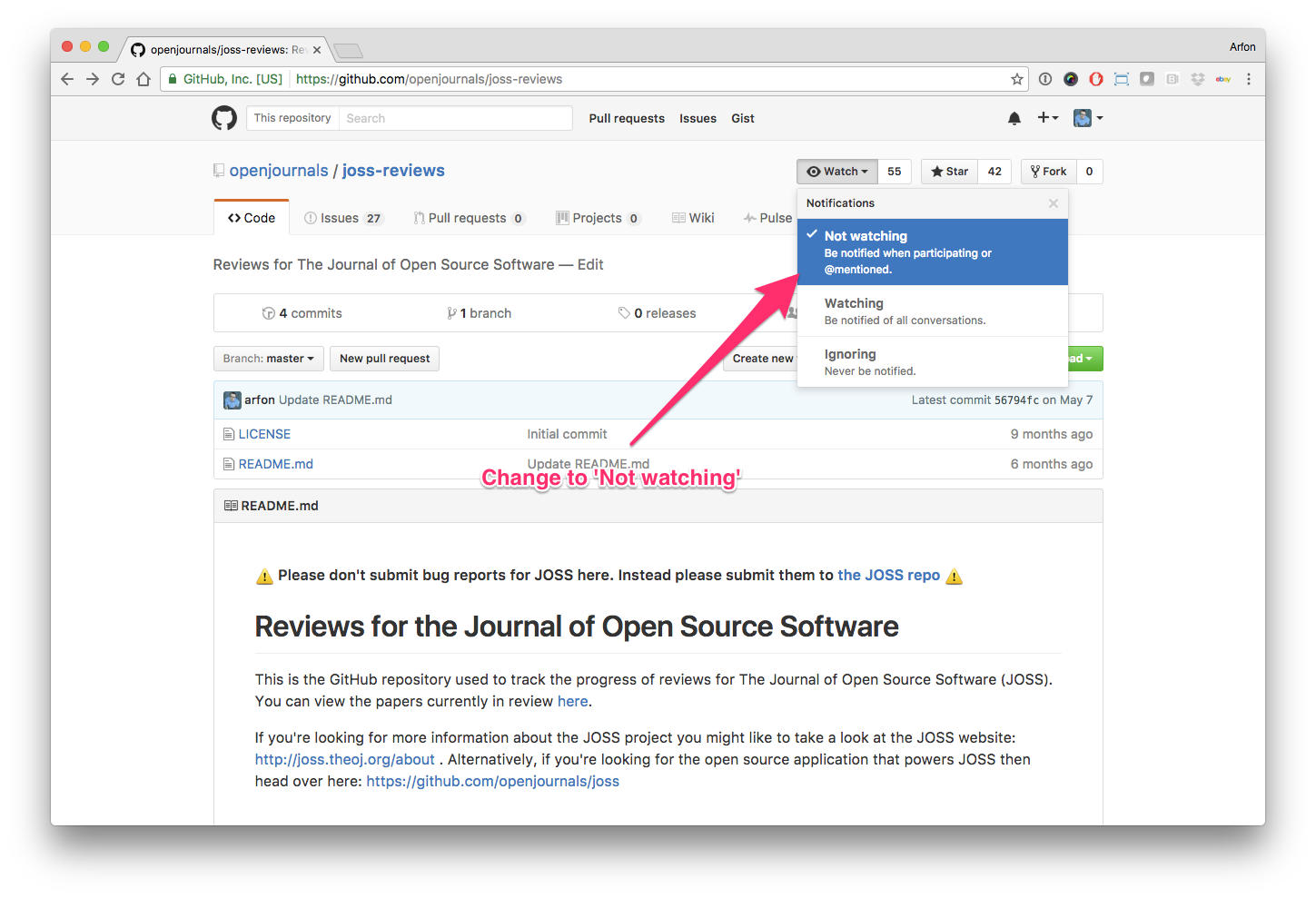

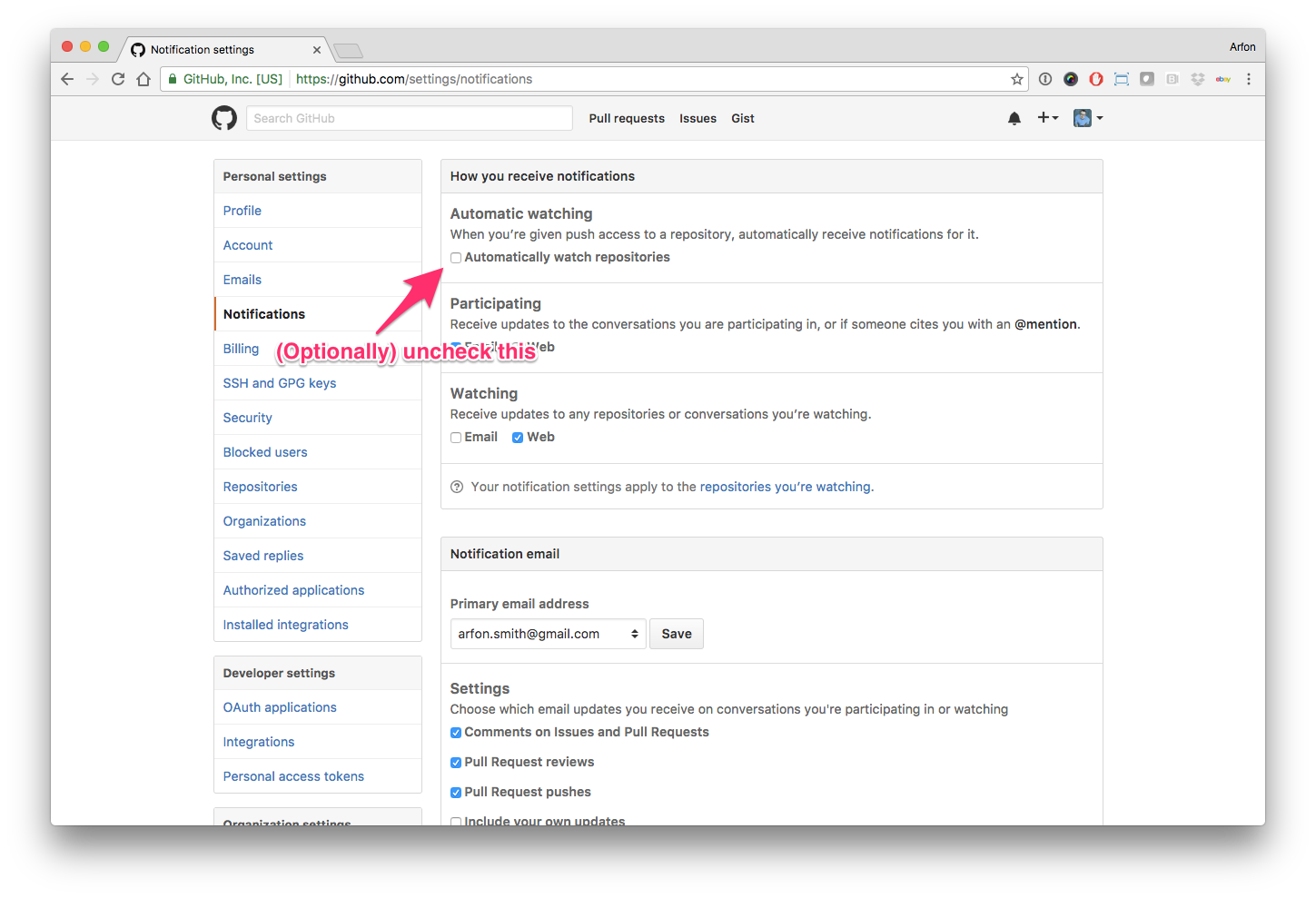

Hello human, I'm @whedon, a robot that can help you with some common editorial tasks. @puolival, @yzhao062 it looks like you're currently assigned to review this paper If you haven't already, you should seriously consider unsubscribing from GitHub notifications for this (https://github.com/openjournals/joss-reviews) repository. As a reviewer, you're probably currently watching this repository which means for GitHub's default behaviour you will receive notifications (emails) for all reviews To fix this do the following two things:

For a list of things I can do to help you, just type: For example, to regenerate the paper pdf after making changes in the paper's md or bib files, type: |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

Hi @puolival & @yzhao062, just a reminder that this will be the place to conduct the official review on. Please go ahead and review when you get a chance and ping the submitting author @pssachdeva or me if you have any questions! |

This comment has been minimized.

This comment has been minimized.

|

@pssachdeva (@terrytangyuan for some reason I could mention Pratik in this thread). It is a good package and I just did some initial tests and crosses some items from the list. Currently, I have three major questions:

Other than that, the installation has instruction and it is easy (via pip). CI is enabled and codecov is in place. I could also find the contribution guide (https://pyuoi.readthedocs.io/en/latest/contributing.html). I look forward to your confirmation on the license, example place/instruction, and the result of the doc test. I will go through the rest review processes at the same time. Cheers! |

This comment has been minimized.

This comment has been minimized.

|

Hi @yzhao062, Thanks for your comments.

We used the Lawrence Berkeley National Labs BSD variant license, whose short identifier is "BSD-3-Clause-LBNL". The license is the same as BSD-3-Clause, but with an additional default contribution licensing clause. See here for more details.

This might be happening because we didn't have a

Thanks for pointing this out. We reorganized our Sphinx Gallery directories so that the scripts are located in a top-level

Could you tell us how you installed the package and which import errors you received? Depending on the install method, we might need to update our

We don't have any code in our docstrings that needs to be tested, so running that command returns no errors with no tests run. However, this point did make us realize that our continuous integration had not been testing whether the documentation was being compiled correctly. Thus, our newest pull request should add that functionality to our pipeline. |

This comment has been minimized.

This comment has been minimized.

|

Checklist The software is well written and documented. I did encounter some issues, but I think they should be easy to solve, and seem to be related to first uses outside the original development environment. In particular, the installation failed for me, but I was still able to work around it by manual work and testing several different environments. I am quite sure that all issues I faced were due to conflicting version numbers. Please make it visible on the requirement lists and the installation instructions page which version numbers of each used package are supported, and which Python version the user is supposed to have. To give an example, in one of my environments, I received errors like this: Regarding the license, I was not able to figure out whether it is OSI approved or not. However, this issue seems to have been already handled with the other reviewer. Manuscript The manuscript is overall well-written, concise, and clear. The language is good and sufficient background is provided to put the work in context. However, I would suggest expanding the section on features. In particular, please consider explaining the example algorithm to the reader, as well as providing a description of how complete the software is at the moment. For example, do the included models cover a large part of typical use-cases? How were they selected? It would be very helpful if this somehow allowed the user to evaluate if her specific analysis/use-case is supported or not. I also have a number of questions, which I think do not necessitate more than minor changes to the manuscript:

|

This comment has been minimized.

This comment has been minimized.

|

Hi @puolival, Thanks for your comments. Checklist

As you stated, the Python version may be the source of the errors you encountered. We have opted to not support Python 2.7 since it is losing support in 2020. However, we do not explicitly state this in either the documentation or the README. We have made it explicit in the documentation and README that PyUoI is tested and supported for Python 3.5+. Additionally, we have clarified the minimum version numbers for the required packages in PyUoI, both in the

We used the BSD-3-Clause-LBNL license, which is OSI approved. See here for the description on the OSI webpage. Manuscript

Could you elaborate on this? Our manuscript includes the pseudocode for a detailed description of the algorithm, and the Background section provides a more general description of the framework (with key steps highlighted in the pseudocode for clarity).

We have added a paragraph in the Features section clarifying our choice of generalized linear models. We chose these because they are the most commonly used models in a variety of disciplines, particularly neuroscience and bioinformatics which are our active fields of research.

There are competing approaches to obtaining sparse parameter estimates to specific types of models, such as the linear regression model or poisson regression model (e.g. coordinate descent in glmnet). UoI was compared to a battery of other approaches for linear regression in the cited NeurIPS paper.

Thank you for pointing this out. We have added the following text in the Summary to better clarify the scalability:

We have clarified the shapes of the vectors in the Background section to emphasize that they are column vectors. Additionally, we have bolded all vectors to add further clarity.

We note that the formulation of the Lasso problem makes no constraints on the exact level of sparsity. Indeed, determining the optimal lambda value is often part of the model estimation procedure. Thus, if the true parameters are more dense, the UoI procedure will obtain more dense parameter estimates. The original Union of Intersections paper examined its performance across a variety of underlying distributions of model parameters, degrees of sparsity, and noise levels, finding superior performance compared to a battery of other algorithms. See the appendix of the paper for more details.

The Union of Intersections framework is capable of obtaining sparse and predictive fits for parametric models. We address the capabilities of UoI in the second paragraph of the Summary, where we state that:

We and our collaborators conduct research in these fields. Neuroscience and genomics datasets are often high-dimensional with structure that is well-suited to the enforcement of a sparsity prior.

This language may have been too strong. The Union of Intersections framework is agnostic to the solver. For example, the UoI Lasso approach requires obtaining (many) solutions to Lasso optimization problems (on resampled datasets), which could be obtained via coordinate descent, gradient descent, orthant-wise LBFGS, etc. The type of solver is not really important insofar as it provides good estimates. With this in mind, we have structured PyUoI to be extendable to other solvers. For example, UoI Lasso can use a coordinate descent solver (obtained from

Thank you for bringing this up. We have added these references to the paper. |

This comment has been minimized.

This comment has been minimized.

|

@whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

I just read the manuscript and it looks good to me. The author also addresses my previous concern in the post above. As a result, I am happy to recommend the paper for acceptance at JOSS :) |

This comment has been minimized.

This comment has been minimized.

|

@whedon check references |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

@pssachdeva Could you fix the missing DOIs mentioned above? |

This comment has been minimized.

This comment has been minimized.

|

@pssachdeva About the algorithm: I was thinking about providing a more detailed explanation of the algorithm's main steps for the less mathematically inclined reader, geared towards providing a conceptual understanding. @pssachdeva @terrytangyuan I have no further comments to the manuscript or the software. I think the last point above can be satisfactorily addressed with minor changes, and doesn't require a re-reading. |

This comment has been minimized.

This comment has been minimized.

|

@whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

@whedon check references |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

Thanks everyone! @pssachdeva At this point could you make a new release of this software that includes the changes that have resulted from this review. Then, please make an archive of the software in Zenodo/figshare/other service and update this thread with the DOI of the archive? For the Zenodo/figshare archive, please make sure that:

|

This comment has been minimized.

This comment has been minimized.

|

@whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

@terrytangyuan Here is the DOI for the package, which we uploaded on Zenodo: 10.5281/zenodo.3563146. Here's a link to the Zenodo page. |

This comment has been minimized.

This comment has been minimized.

|

Edited the above to the "all versions" DOI. |

This comment has been minimized.

This comment has been minimized.

|

JOSS wants the DOI associated with the specific version that is being discussed in this paper, not the “concept” or “all versions” DOI |

This comment has been minimized.

This comment has been minimized.

|

@terrytangyuan @danielskatz OK, here's the DOI for the version discussed in the paper: 10.5281/zenodo.3563147 |

This comment has been minimized.

This comment has been minimized.

|

@whedon set 1.0.0 as version |

This comment has been minimized.

This comment has been minimized.

|

OK. 1.0.0 is the version. |

This comment has been minimized.

This comment has been minimized.

|

@whedon set 10.5281/zenodo.3563147 as archive |

This comment has been minimized.

This comment has been minimized.

|

OK. 10.5281/zenodo.3563147 is the archive. |

This comment has been minimized.

This comment has been minimized.

|

@whedon accept |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

Check final proof If the paper PDF and Crossref deposit XML look good in openjournals/joss-papers#1156, then you can now move forward with accepting the submission by compiling again with the flag |

This comment has been minimized.

This comment has been minimized.

|

@danielskatz The paper looks good to me now. Could you take it from here? |

This comment has been minimized.

This comment has been minimized.

|

Yes, but in the future, please mention @openjournals/jose-eics in such requests |

This comment has been minimized.

This comment has been minimized.

|

@pssachdeva - please merge BouchardLab/pyuoi#193 and check to make sure all the cases are correct in the references. |

Submitting author: @pssachdeva (Pratik Sachdeva)

Repository: https://github.com/BouchardLab/pyuoi

Version: 1.0.0

Editor: @terrytangyuan

Reviewer: @puolival, @yzhao062

Archive: 10.5281/zenodo.3563147

Status

Status badge code:

Reviewers and authors:

Please avoid lengthy details of difficulties in the review thread. Instead, please create a new issue in the target repository and link to those issues (especially acceptance-blockers) by leaving comments in the review thread below. (For completists: if the target issue tracker is also on GitHub, linking the review thread in the issue or vice versa will create corresponding breadcrumb trails in the link target.)

Reviewer instructions & questions

@puolival & @yzhao062, please carry out your review in this issue by updating the checklist below. If you cannot edit the checklist please:

The reviewer guidelines are available here: https://joss.readthedocs.io/en/latest/reviewer_guidelines.html. Any questions/concerns please let @terrytangyuan know.

Review checklist for @puolival

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper

Review checklist for @yzhao062

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper