[REVIEW]: PyEscape #2072

Comments

This comment has been minimized.

This comment has been minimized.

|

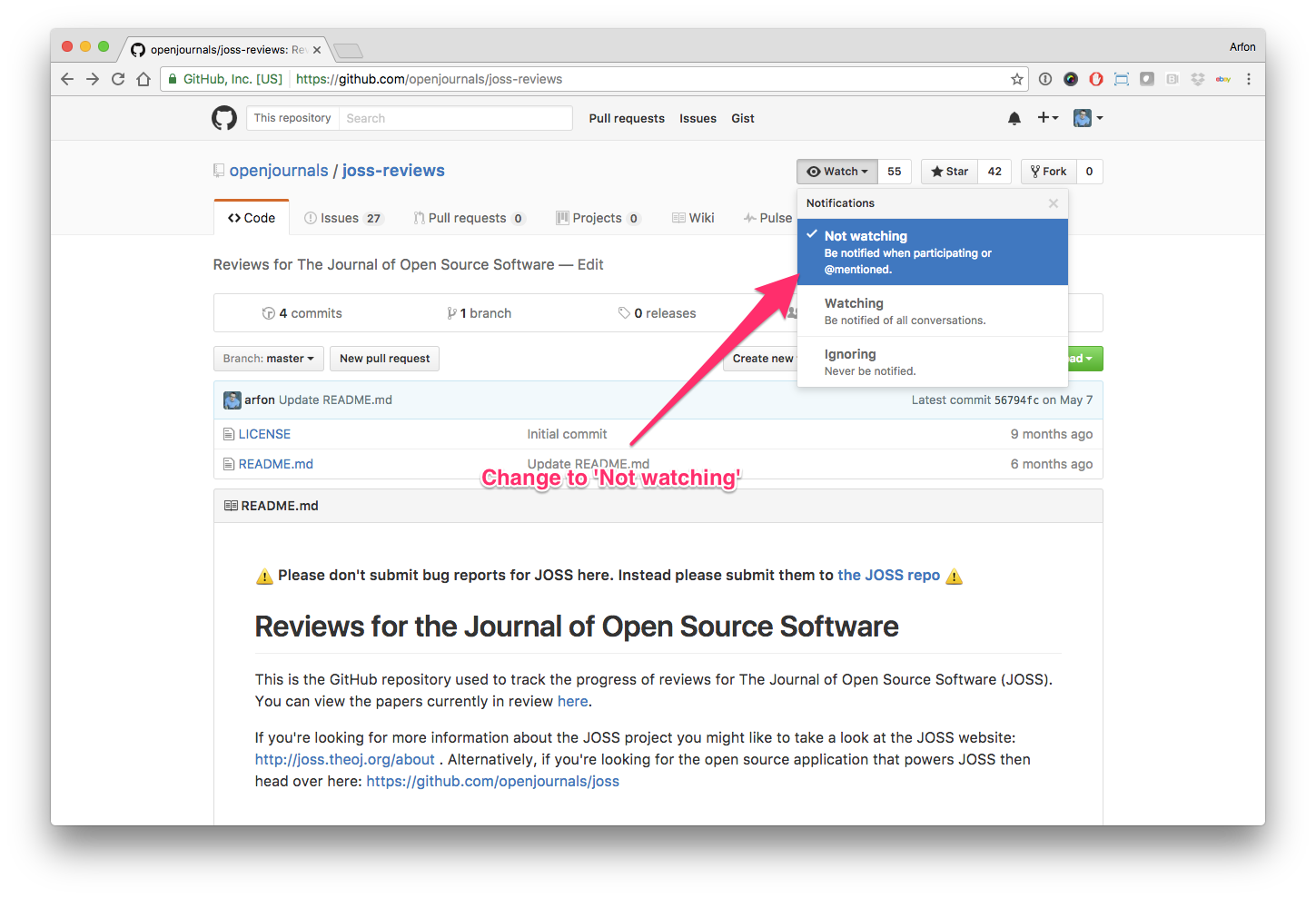

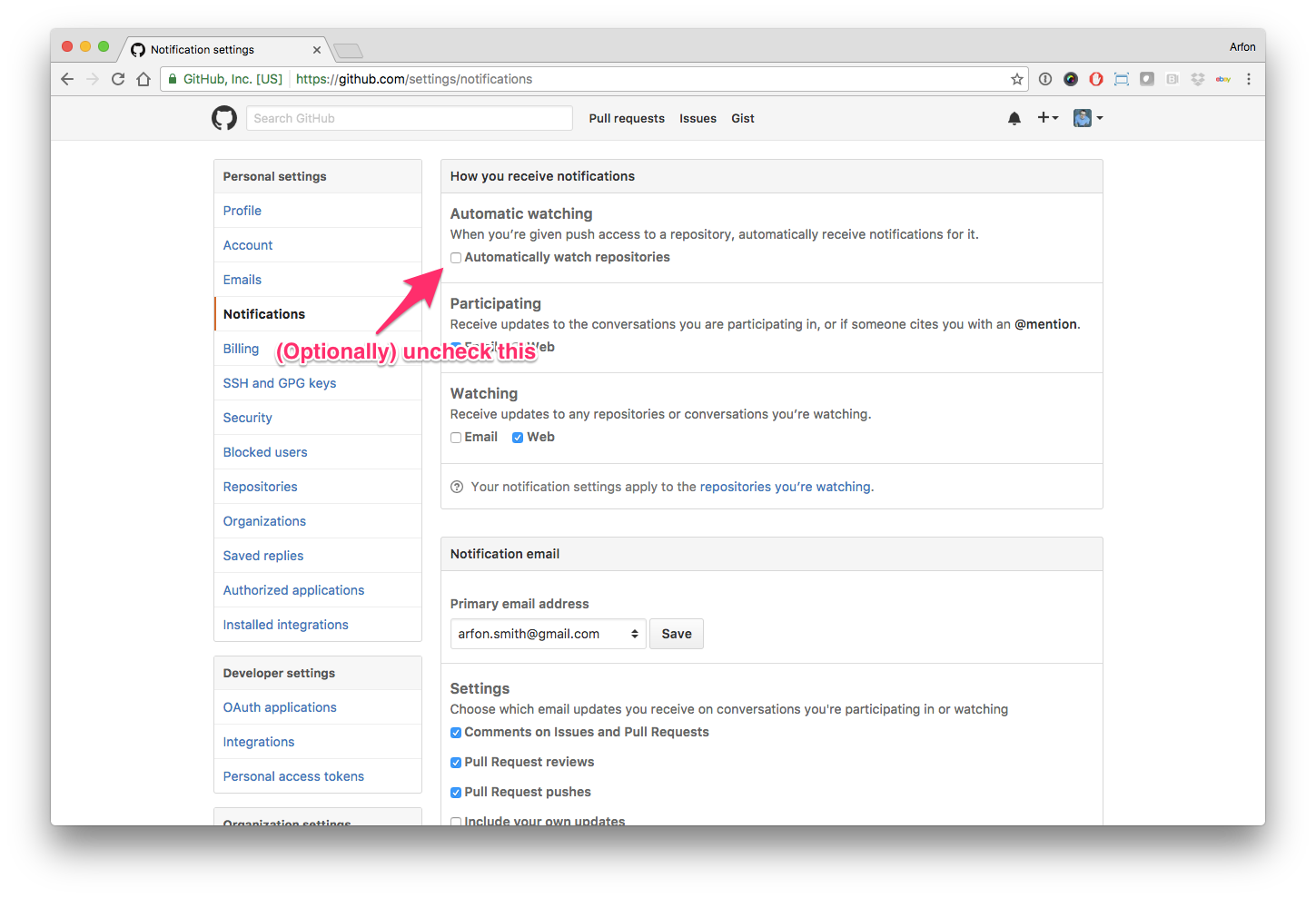

Hello human, I'm @whedon, a robot that can help you with some common editorial tasks. @pdebuyl, @markgalassi it looks like you're currently assigned to review this paper. If you haven't already, you should seriously consider unsubscribing from GitHub notifications for this (https://github.com/openjournals/joss-reviews) repository. As a reviewer, you're probably currently watching this repository, which means that with GitHub's default behaviour you will receive notifications (emails) for all reviews. To fix this, do the following two things:

For a list of things I can do to help you, just type:

For example, to regenerate the paper PDF after making changes in the paper's md or bib files, type:

@drvinceknight I can't tick the boxes in the review checklist. From memory, I was able to edit the checklist directly in my earlier work with JOSS. Is there anything that I should do to get the authorization?

That's strange. I've just checked and I can tick them, so I'd assume you can too, because I believe you have all the necessary authorisation. Could you double check and also perhaps try a different browser?

My bad @drvinceknight, I needed to renew my invitation to the reviewers group. I thought that it would not be needed for reviewers who had already served.

No problem, glad it's sorted.

Hi @SirSharpest, I had a first look at the program. I believe that the documentation needs to be improved. I have

Functionality

Installation: Does installation proceed as outlined in the documentation?

Functionality: Have the functional claims of the software been confirmed?

I ran the Example notebook with success. I would like to have at least a few benchmark cases.

Documentation

A statement of need: Do the authors clearly state what problems the software is designed to

The problem is well stated. The target audience is not.

Installation instructions: Is there a clearly-stated list of dependencies? Ideally these

A requirements.txt file is missing. This is the most standard way to state dependencies for

Example usage: Do the authors include examples of how to use the software (ideally to solve

There is one example notebook. I suggest improving the example by adding a textbook-type

Functionality documentation: Is the core functionality of the software documented to a

No. There are no docstrings and no module-wide documentation page. The purpose of the

Automated tests: Are there automated tests or manual steps described so that the

There is limited testing. The test checks against an upper limit. An output time of zero

Community guidelines: Are there clear guidelines for third parties wishing to 1) Contribute

There is a contribution section in the readme. It only applies to new features and not to

@pdebuyl Thank you for the detailed response, and for taking the time to review. I will address these issues and report back within the next week.

I have just pushed a series of commits which I feel address most of the concerns:

Functionality

Documentation

Other

I am happy to take on board any other issues that reviewers may have and will address them promptly as well. Thank you again for your valuable input.

I would also like to add that we are currently preparing to submit a paper which will cite this software. When it is published, we will be able to provide additional real-world solutions. Until then, we are reluctant to add further examples, to avoid self-plagiarism and to avoid removing novelty from our current research.

@markgalassi apologies for the nudge, do you know when you might have a moment to carry out your review?

@SirSharpest the DOI for the JOSS paper should already be known. @drvinceknight is it OK to cite a JOSS paper before its acceptance?

I don't think it would be appropriate to cite before acceptance; the DOI currently seems to be inactive.

Yes, the DOI is not yet an actual representation of the software, as it might still be modified. I'm still waiting to hear back from @markgalassi, but if I don't soon I will look for another reviewer.

I just got back from travel and dove into the paper. I am afraid that it is clearly not a candidate for anything yet. The simplest of things (following the procedure in README.md) fails:

gives:

Clearly some variable p should be set, but for a minimal example, which is the first thing shown, this is not given! Please have the author double-check that the README.md works and then we can get back to work on evaluating this software.

Let me also add a comment on the idea of notebooks as documentation: I know they are much in vogue, but there are people (like me) who insist on reproducible procedures for running Python code in batch mode. This means that I am not in the habit of loading notebooks, and if I am presented with documentation as a notebook I want a command-line invocation which will load all of that. So pointing to a directory is not enough: please give a command to load that notebook on a standard GNU/Linux system.

(sorry: clicked wrong button and "close"ed the issue; I think that this reopens it)

Thanks for your comments. I have added the required lines to the readme.

Re Notebooks: For the purpose of serving as documentation and providing an example of how to use the software, the integrated GitHub viewer should be enough. This is presented as a method without results (beyond solving a known problem), so I wouldn't expect running it to be a complete necessity. That said, most users will want to run notebooks differently, and depending on your current setup you should be able to run

Though, if you're wanting that command-line experience, then running from bash (provided you installed the library) will give the same results, without plots, as in the example notebook.

Thank you for the update. I ticked many checks for the review already. Some items remain to

I filed pull requests for 7 and part of 6.

Further testing: thanks @SirSharpest for addressing the issues I reported previously. Smaller ones now:

So p and dt are not defined. This is the usual problem with the notebook people trying to write reproducible and deliverable software and documentation :-)

Otherwise my earlier problems seem to have been addressed and I am continuing with the checklist.

Again, thank you for the comments and suggestions. Regarding the testing and the errors in the examples in particular: I was running the examples sequentially, so variables were carried over from one to the next. I've also updated the readme to remove the unnecessary text, and I've updated several tests to use the NumPy floating-point closeness functions. Pull requests for minor fixes have been accepted.
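For readers unfamiliar with the closeness functions mentioned above, here is a minimal sketch of the idea. It is not taken from the PyEscape test suite: the helper `mean_escape_time` and the sample values are hypothetical and exist only to illustrate why a tolerance-based comparison is preferred over exact equality for floating-point results.

```python
import numpy as np


def mean_escape_time(samples):
    """Hypothetical helper: average a sequence of simulated escape times."""
    return float(np.mean(samples))


def test_mean_escape_time_is_close():
    # Made-up values, chosen only to illustrate the comparison.
    samples = [0.1, 0.2, 0.3]
    expected = 0.2
    result = mean_escape_time(samples)
    # Rounding can make an exact `result == expected` check fail,
    # so compare within a relative tolerance instead.
    np.testing.assert_allclose(result, expected, rtol=1e-9)
    assert np.isclose(result, expected)


# The classic case: exact equality fails, a closeness check succeeds.
exact_equal = (0.1 + 0.2) == 0.3
close_enough = bool(np.isclose(0.1 + 0.2, 0.3))

test_mean_escape_time_is_close()
```

`numpy.testing.assert_allclose` raises an `AssertionError` with a readable report on failure, which tends to be more convenient inside a test than a bare `assert` on `numpy.isclose`.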

I have checked most of the boxes in the paper checklist. Here is a small suggested diff (below) so you don't assume that users are steeped in markdown.

I would suggest that the "statement of need" in the readme.md file could do with a bit more, and that the paper put a math citation at the moment of stating "The mathematical models provided are simple and robust @iWouldCiteMySourceHere".

I also have not yet checked the "state of the field" checkbox. In the paper you discuss the Schuss and Holcman papers, but make no reference to existing software. You say yours is "novel" but you do not say that there is no "narrow escape" software.

And another nit: when I did a "git diff" after running your notebook procedure I saw a diff on a date in the notebook. Remember: notebooks are not reproducible and they are not entirely human source, so it is flawed to add them to a version control repo. I would not hold up the paper on this, but I would recommend that you put reproducibility and best VC practices front-and-center: commit a .py file, then provide a simple one-liner to load that into a notebook for notebook types.

Other than those simple suggestions, I think you meet the criteria and I would quickly finish this off. And if I might add a personal note: your project is interesting! It made me want to read up on narrow escape and take an interest in the topic.
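As an illustration of the "commit a .py file, generate the notebook from it" suggestion, here is a minimal standard-library sketch. It is not part of PyEscape and not necessarily what the reviewer had in mind: the function name `script_to_notebook` is hypothetical, and it assumes the script marks cell boundaries with `# %%` comment lines (a convention understood by several editors). Dedicated tools such as Jupytext cover this use case far more completely.

```python
import json


def script_to_notebook(source: str) -> dict:
    """Build a minimal nbformat-4 notebook dict from '# %%'-delimited source.

    Hypothetical helper for illustration only.
    """
    chunks, current = [], []
    for line in source.splitlines():
        if line.strip().startswith("# %%"):
            # A marker line closes the current cell and starts a new one.
            if current:
                chunks.append(current)
            current = []
        else:
            current.append(line)
    if current:
        chunks.append(current)

    cells = []
    for chunk in chunks:
        text = "\n".join(chunk).strip("\n")
        if not text:
            continue  # skip empty cells
        cells.append({
            "cell_type": "code",
            "execution_count": None,  # no execution state is stored
            "metadata": {},
            "outputs": [],            # no outputs, so no run-dependent diffs
            "source": text,
        })
    return {"cells": cells, "metadata": {}, "nbformat": 4, "nbformat_minor": 4}


# Made-up two-cell script, used only to exercise the function.
example = "# %%\nD = 400\n# %%\nprint(D)\n"
notebook = script_to_notebook(example)
print(json.dumps(notebook, indent=1))
```

Because the generated notebook carries no outputs or execution counts, only the plain-text script needs to live in version control, and re-running the notebook does not change any tracked file.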

@whedon set 10.5281/zenodo.3725946 as archive

OK. 10.5281/zenodo.3725946 is the archive.

@whedon accept

Check final proof

If the paper PDF and Crossref deposit XML look good in openjournals/joss-papers#1400, then you can now move forward with accepting the submission by compiling again with the flag

Sorry for the delay @SirSharpest, I've recommended acceptance. Thank you @pdebuyl and @markgalassi for your time and effort reviewing this work: it's really appreciated.

Thanks - I'll work on finishing this

@SirSharpest - please update the archive's metadata so that the title matches the paper title

Additionally, I've suggested some changes to the paper in SirSharpest/NarrowEscapeSimulator#6

And I've suggested some changes to the references in SirSharpest/NarrowEscapeSimulator#7

@whedon generate pdf

Hi @danielskatz, thank you for the changes. I've reviewed and merged them, and just updated the Zenodo title.

@whedon accept

Check final proof

If the paper PDF and Crossref deposit XML look good in openjournals/joss-papers#1401, then you can now move forward with accepting the submission by compiling again with the flag

@whedon accept deposit=true

Here's what you must now do:

Any issues? Notify your editorial technical team...

Thanks to @pdebuyl & @markgalassi for reviewing, and @drvinceknight for editing! And congratulations to @SirSharpest and co-authors!

If you would like to include a link to your paper from your README, use the following code snippets:

This is how it will look in your documentation:

We need your help!

Journal of Open Source Software is a community-run journal and relies upon volunteer effort. If you'd like to support us, please consider doing either one (or both) of the following:

Submitting author: @SirSharpest (Nathan Hughes)

Repository: https://github.com/SirSharpest/NarrowEscapeSimulator

Version: 1.0

Editor: @drvinceknight

Reviewer: @pdebuyl, @markgalassi

Archive: 10.5281/zenodo.3725946

Status

Status badge code:

Reviewers and authors:

Please avoid lengthy details of difficulties in the review thread. Instead, please create a new issue in the target repository and link to those issues (especially acceptance-blockers) by leaving comments in the review thread below. (For completists: if the target issue tracker is also on GitHub, linking the review thread in the issue or vice versa will create corresponding breadcrumb trails in the link target.)

Reviewer instructions & questions

@pdebuyl & @markgalassi, please carry out your review in this issue by updating the checklist below. If you cannot edit the checklist please:

The reviewer guidelines are available here: https://joss.readthedocs.io/en/latest/reviewer_guidelines.html. Any questions/concerns please let @drvinceknight know.

Review checklist for @pdebuyl

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper

Review checklist for @markgalassi

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper