Join GitHub today

GitHub is home to over 40 million developers working together to host and review code, manage projects, and build software together.

Sign up[REVIEW]: mimosa: A Modern Graphical User Interface for 2-level Mixed Models #2116

Comments

This comment has been minimized.

This comment has been minimized.

|

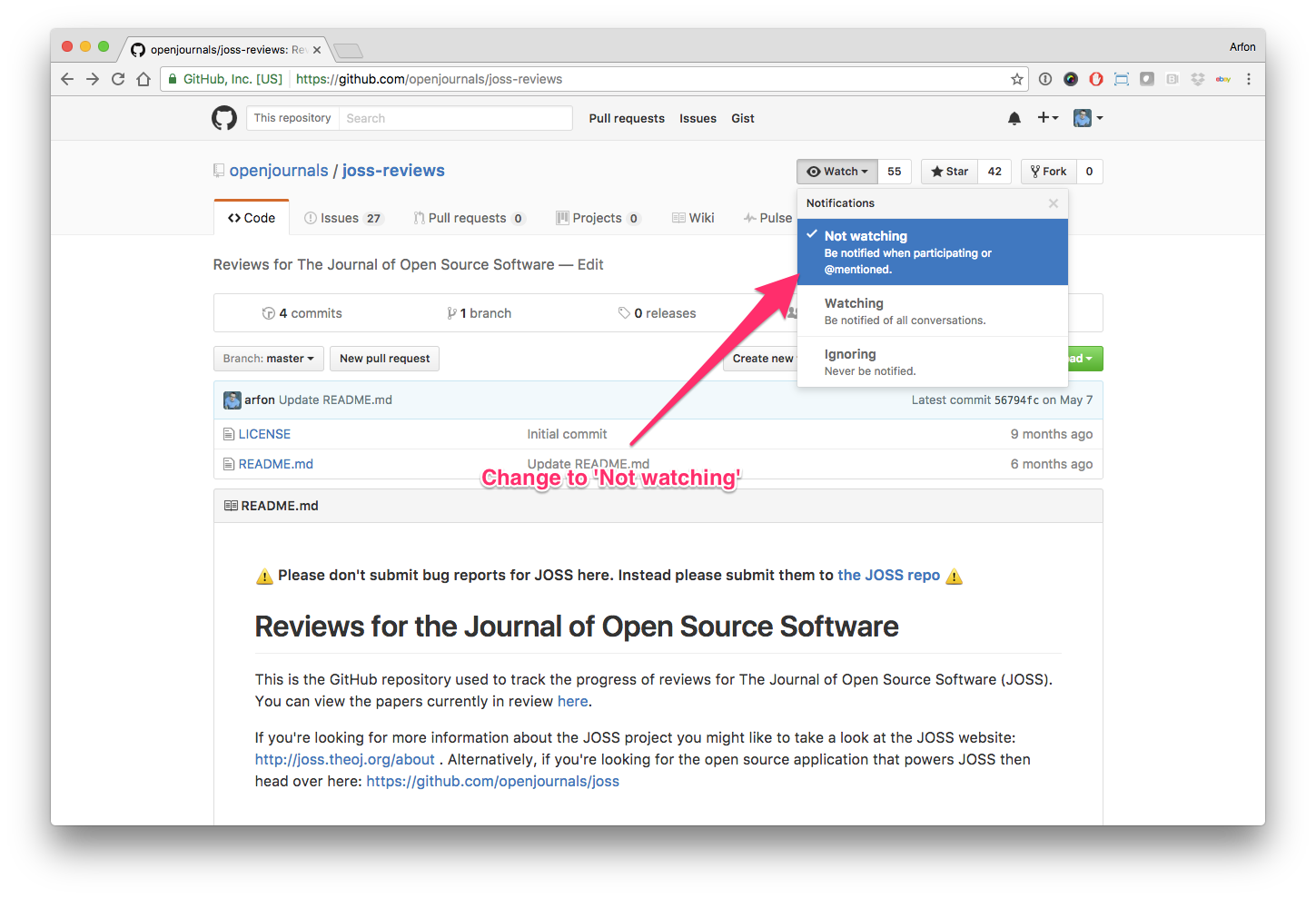

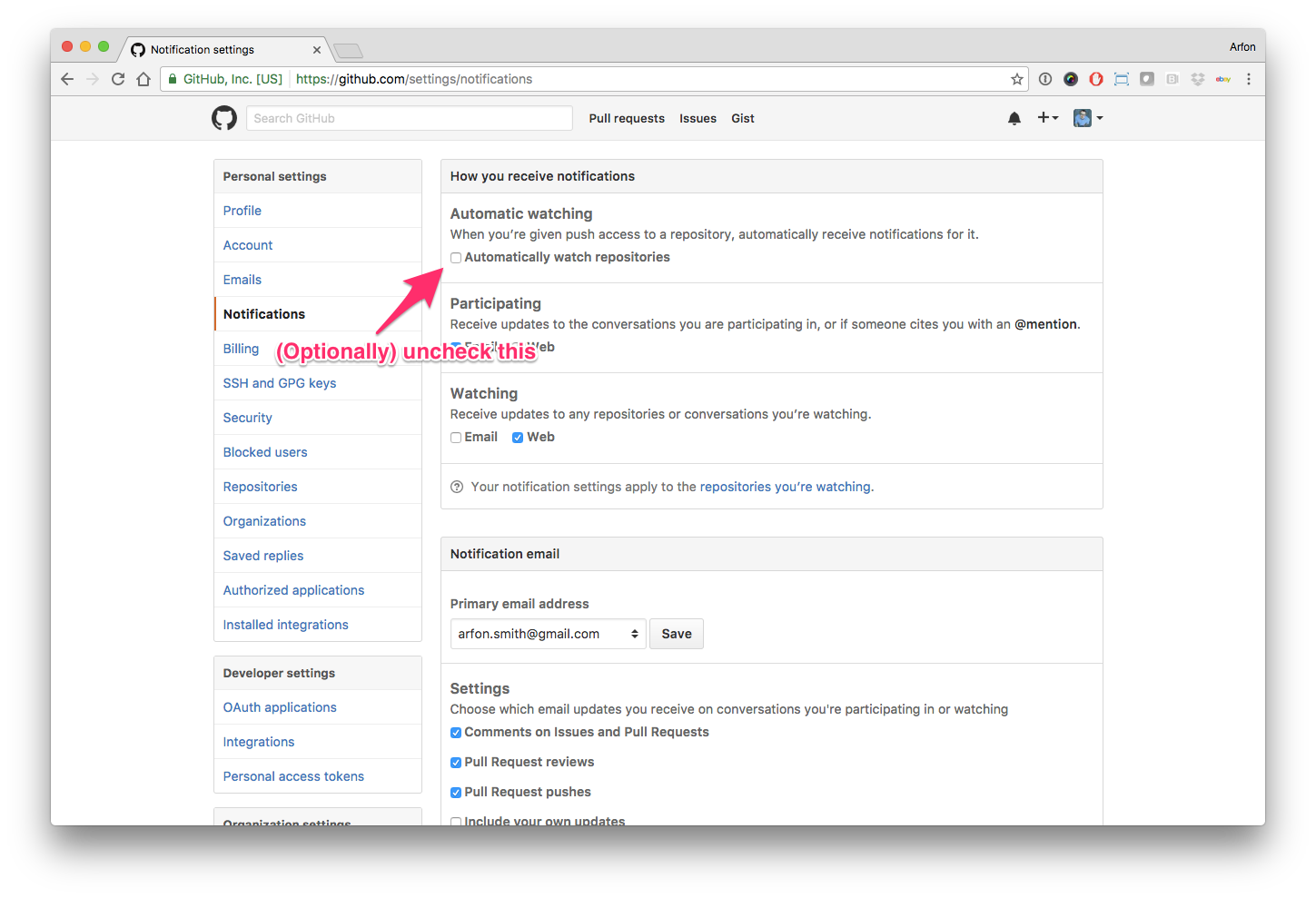

Hello human, I'm @whedon, a robot that can help you with some common editorial tasks. @strengejacke, @aj2duncan it looks like you're currently assigned to review this paper If you haven't already, you should seriously consider unsubscribing from GitHub notifications for this (https://github.com/openjournals/joss-reviews) repository. As a reviewer, you're probably currently watching this repository which means for GitHub's default behaviour you will receive notifications (emails) for all reviews To fix this do the following two things:

For a list of things I can do to help you, just type: For example, to regenerate the paper pdf after making changes in the paper's md or bib files, type: |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

@strengejacke @aj2duncan, do let me know if you have any questions as you review the submission :) |

This comment has been minimized.

This comment has been minimized.

|

Hi @johannes-titz, I've got a query for you if that's ok. In terms of contributions you list a contributer on mimosa.org and a second user has contributed 71 commits to the repo. I'm not sure if this is the same person or different people, but perhaps you need to consider the authorship of the submission? Thanks, Andrew |

This comment has been minimized.

This comment has been minimized.

|

@aj2duncan Thank you for the query! The contributor mentioned on mimosa.icu is Maria Reichert. If you run gitinspector -f R, you will see that she made one commit (the initial one), which makes about 6% of all changes. We planned to work on mimosa together and she wrote a first scaffold, but was too busy to continuously work on the project. She knows that I submitted the software to JOSS and is fine with not appearing as a main author. But I will add her as a contributor to the README.md and acknowledge her contribution--I just really forgot to do that before submitting. The other user with 71 commits is actually just another account of myself (gitfortat). To my knowledge I cannot merge both accounts in such a way that these commits would belong to my new account. But the output of gitinspector at least shows my first name. The associated e-mail address for gitfortat is johannes.titz@gmail.com, to give an example here is one commit: https://github.com/johannes-titz/mimosa/commit/a716de17eb4c0a371cc5fd809036dac13fc0a0a6.patch If you remove the ".patch" in the url you will see that this is a commit by gitfortat. Sorry for the confusion! |

This comment has been minimized.

This comment has been minimized.

|

Thanks @johannes-titz, |

This comment has been minimized.

This comment has been minimized.

|

Just read your comment, which makes my first part of my answer redundant. |

This comment has been minimized.

This comment has been minimized.

|

Hi @johannes-titz, Thanks for the quick reply. That all seems fine and I can understand the dual accounts - sorry I should have dug a little deeper to see the email addresses! I'll try to complete the review this evening. Andrew |

This comment has been minimized.

This comment has been minimized.

|

@aj2duncan No problem. This is my mistake, sorry for not stating it earlier! I will add the information on the contributing author asap. @strengejacke Thank you very much! I am actually not a big fan of centering since the data I usually analyze has a meaningful interpretation of the zero point. So far I thought centering is rather optional and more of a theoretical problem (what should zero represent?) than a statistical one. I was not familiar with the heterogeneity bias, so I will definitely read the suggested literature and consider adding a "demeaning" option. I assume this is not the right place to discuss it, but I am curious: Will demeaning only affect p-values and confidence intervals (e.g. R² remains the same)? Could the problem alternatively be handled by bootstrapping (if it only affects the error terms)? What happens with variables that are on a ratio scale? From a theoretical point of view, I would not suggest transforming them (except for a multiplication by a constant). Since you do not insist on adding a demeaning option in this review, I would like to consider it for a later release. But thank you very much for pointing me to this problem! I am looking forward to read about it in more detail. |

This comment has been minimized.

This comment has been minimized.

|

Hi @johannes-titz, As @strengejacke says, the paper is well written and everything is well documented. The app is intuitive and it is very nice that it is hosted online for users. The only minor comments I would make are related to Shiny and I have no further comments about the paper. In terms of the app I can see two small things to consider for improvements. First is to consider adding a progress bar to the model calculation, even in the example you can get the app to hang slightly if you click the wrong variable. Adding a progress bar would let the user know it is just working away in the background. Second is a recommendation to consider whether you want the app to recalculate the model anytime a user changes an input. You may wish to isolate some (or all) inputs and let the user only rerun the model when they've finished. You will know the intended audience far better than I and can make an informed decision whether either of these suggestions merit the time needed to implement them. |

This comment has been minimized.

This comment has been minimized.

|

That's a good recommendation, indeed. I didn't run into performance problems, so I didn't realize that issue - but having larger data sets, it might make more sense to select your input variables first, then click on an "update-button" or similar, which might be useful in particular for very large data sets. |

This comment has been minimized.

This comment has been minimized.

|

@aj2duncan, @strengejacke Thank you for very much for the feedback! I really like the idea of having a progress bar. Unfortunately this is not a trivial think to do (e.g. see discussion here lme4/lme4#482). It would be easier to show the iteration step and the optimization criterion, which is sort of a progress indicator (e.g. one can anticipate when it converges). But even for this I first need to check how I can implement this. Isolating the inputs is also a good idea, although in most cases I prefer the interactivity. Maybe I can add an option to isolate/not isolate the inputs. I will try it out and give you an update. |

This comment has been minimized.

This comment has been minimized.

|

Dear authors and reviewers We wanted to notify you that in light of the current COVID-19 pandemic, JOSS has decided to suspend submission of new manuscripts and to handle existing manuscripts (such as this one) on a "best efforts basis". We understand that you may need to attend to more pressing issues than completing a review or updating a repository in response to a review. If this is the case, a quick note indicating that you need to put a "pause" on your involvement with a review would be appreciated but is not required. Thanks in advance for your understanding. Arfon Smith, Editor in Chief, on behalf of the JOSS editorial team. |

This comment has been minimized.

This comment has been minimized.

|

@aj2duncan, @strengejacke I had some time to check out how to implement the suggested features and would like to give you an update: First of all, a progress bar is quite hard to implement for two reasons: (1) It is not easy to predict how many iteration steps are necessary for convergence and (2) At the moment I do not know how to access the iteration steps without “hacking” lme4 (I asked a question on SO, so maybe I will find away in the future). I thought of just displaying the convergence criterion, but because of point (2) (and shiny limitations), this is also not possible at the moment. Unfortunately I cannot invest much more time into this as I already spend several days to research and try out some options. Instead, I simply added a “spinner“ and a message that the model is estimated. I believe this is sufficient since all of the plausible models I fitted finished in a few seconds. The only problem arose when I used “student” as an independent variable for the example data set. The variable has 650 levels and setting up the model takes pretty much forever, while estimating goes quickly. It does not make sense to fit a model with a factor that has so many levels. Thus, I decided to exclude factor variables with more than 10 levels. I think this is reasonable as it avoids fitting meaningless models that take very long. Furthermore, if one still wants to do this, one can create dummy variables manually before uploading the data to mimosa. Regarding the non-reactive (isolated) inputs, I simply included a checkbox that switches between isolated and non-isolated inputs. If the inputs are isolated, a button to estimate the model is displayed. Depending on user feedback, I might change the default to isolated inputs in the future. For now I would like to keep it reactive when mimosa starts. The updated version is currently available under the branch “joss_review”; to install in R: The updated app is also available under www.mimosa.icu/joss_review and for the example data set: https://www.mimosa.icu/joss_review_example. If you are happy with the changes, I will merge them into master. I hope everything works as expected. By the way, I also updated the acknowledgements and added the contributing author to the DESCRIPTION file (this is also already on master). @cMadan If it is ok I would like to continue the review and not pause it. |

This comment has been minimized.

This comment has been minimized.

|

@johannes-titz I really like those changes that you've made. The spinner that you've added is just exactly the thing that I was thinking about, rather than something more accurate using the convergence of lme4. I also like the reactive vs non-reactive mode. Very simple to use. Changing between the two settings did also show/hide the contributions, which you may want to tweak. Otherwise, really like the changes. @cMadan from my point of view - very happy for this review to be concluded. |

This comment has been minimized.

This comment has been minimized.

|

@aj2duncan Thank you very much! Regarding the problem with showing/hiding the contributions, I am not able to reproduce it. When exactly does it happen? Which browser are you using? |

This comment has been minimized.

This comment has been minimized.

|

@cMadan @johannes-titz From my perspective, all issues have been addressed, as well. |

This comment has been minimized.

This comment has been minimized.

|

@johannes-titz I was using your example app at the joss-review link above on Chrome but now I can't reproduce it either - so nevermind not sure what was happening. |

This comment has been minimized.

This comment has been minimized.

|

@strengejacke @aj2duncan, thank you both for your thorough reviews of this submission! @johannes-titz, next I will do some final checks. |

This comment has been minimized.

This comment has been minimized.

|

@aj2duncan Ok. I guess it can happen if there are many variables and the footer is moved down because of this, but I am not really sure. @cMadan Thank you! If I can help somehow, let me know. The master is now up to date. If you think copy-editing for the paper is necessary (as you can see I am not a native speaker), I will look for someone. |

This comment has been minimized.

This comment has been minimized.

|

@whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

@cMadan Just to keep you up to date: I sent the paper to a copy-editor and she found a couple of small grammar mistakes and made some minor improvements in word choice. These changes are already included in the master (see: johannes-titz/mimosa@a80736f?short_path=fb6416b). I believe this is now good to go. @whedon generate pdf |

This comment has been minimized.

This comment has been minimized.

|

@whedon generate pdf |

Submitting author: @johannes-titz (Johannes Titz)

Repository: https://github.com/johannes-titz/mimosa

Version: v0.2.0

Editor: @cMadan

Reviewer: @strengejacke, @aj2duncan

Archive: Pending

Status

Status badge code:

Reviewers and authors:

Please avoid lengthy details of difficulties in the review thread. Instead, please create a new issue in the target repository and link to those issues (especially acceptance-blockers) by leaving comments in the review thread below. (For completists: if the target issue tracker is also on GitHub, linking the review thread in the issue or vice versa will create corresponding breadcrumb trails in the link target.)

Reviewer instructions & questions

@strengejacke & @aj2duncan, please carry out your review in this issue by updating the checklist below. If you cannot edit the checklist please:

The reviewer guidelines are available here: https://joss.readthedocs.io/en/latest/reviewer_guidelines.html. Any questions/concerns please let @cMadan know.

Review checklist for @strengejacke

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper

Review checklist for @aj2duncan

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper