Join GitHub today

GitHub is home to over 36 million developers working together to host and review code, manage projects, and build software together.

Sign up[REVIEW]: ReinforcementLearning: A Package to Perform Model-Free Reinforcement Learning in R #1087

Comments

whedon

assigned

arfon

whedon

assigned

arfon

Nov 19, 2018

whedon

added

the

review

label

whedon

added

the

review

label

Nov 19, 2018

whedon

referenced this issue

whedon

referenced this issue

Nov 19, 2018

Closed

[PRE REVIEW]: ReinforcementLearning: A Package to Perform Model-Free Reinforcement Learning in R #1045

This comment has been minimized.

This comment has been minimized.

|

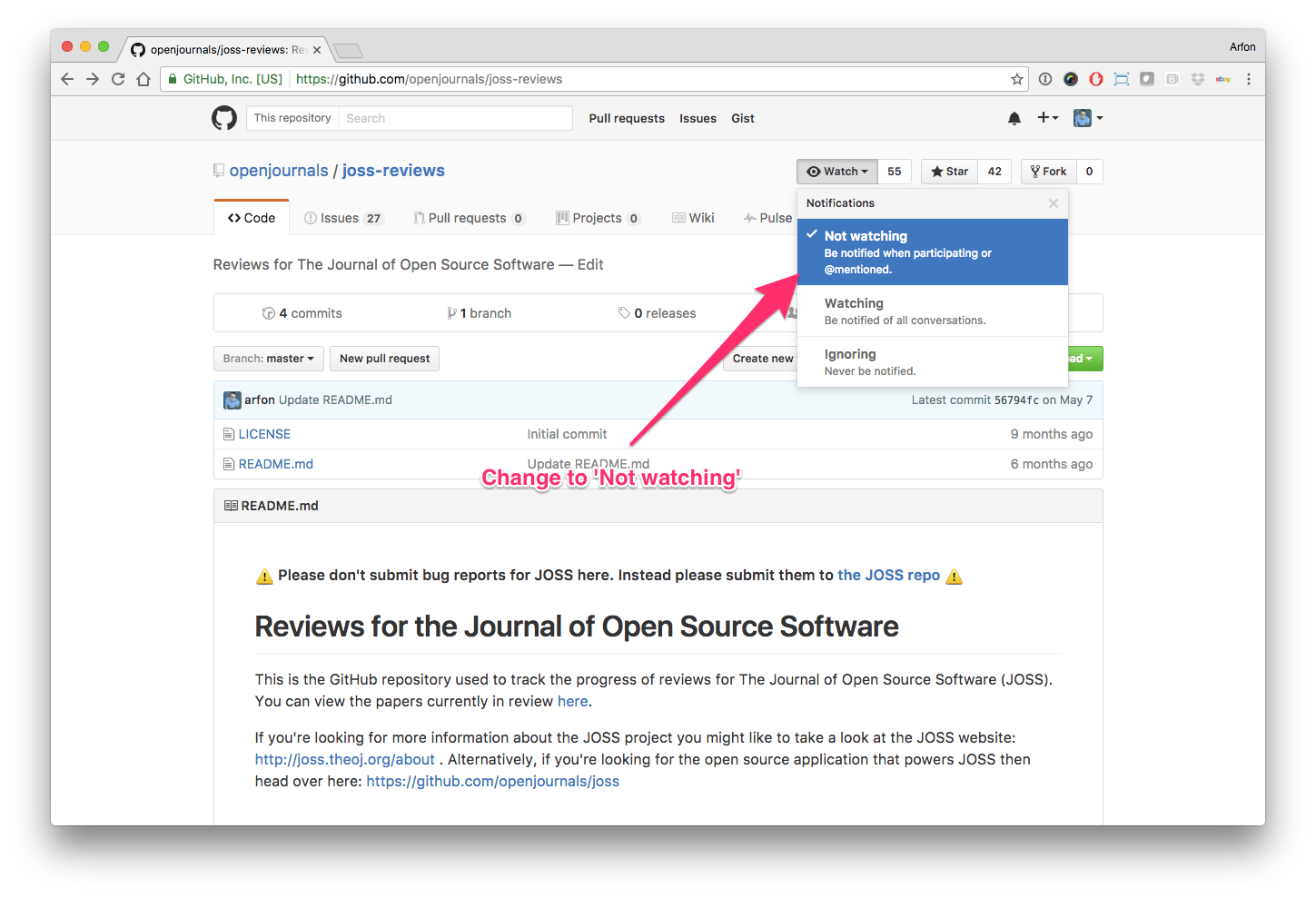

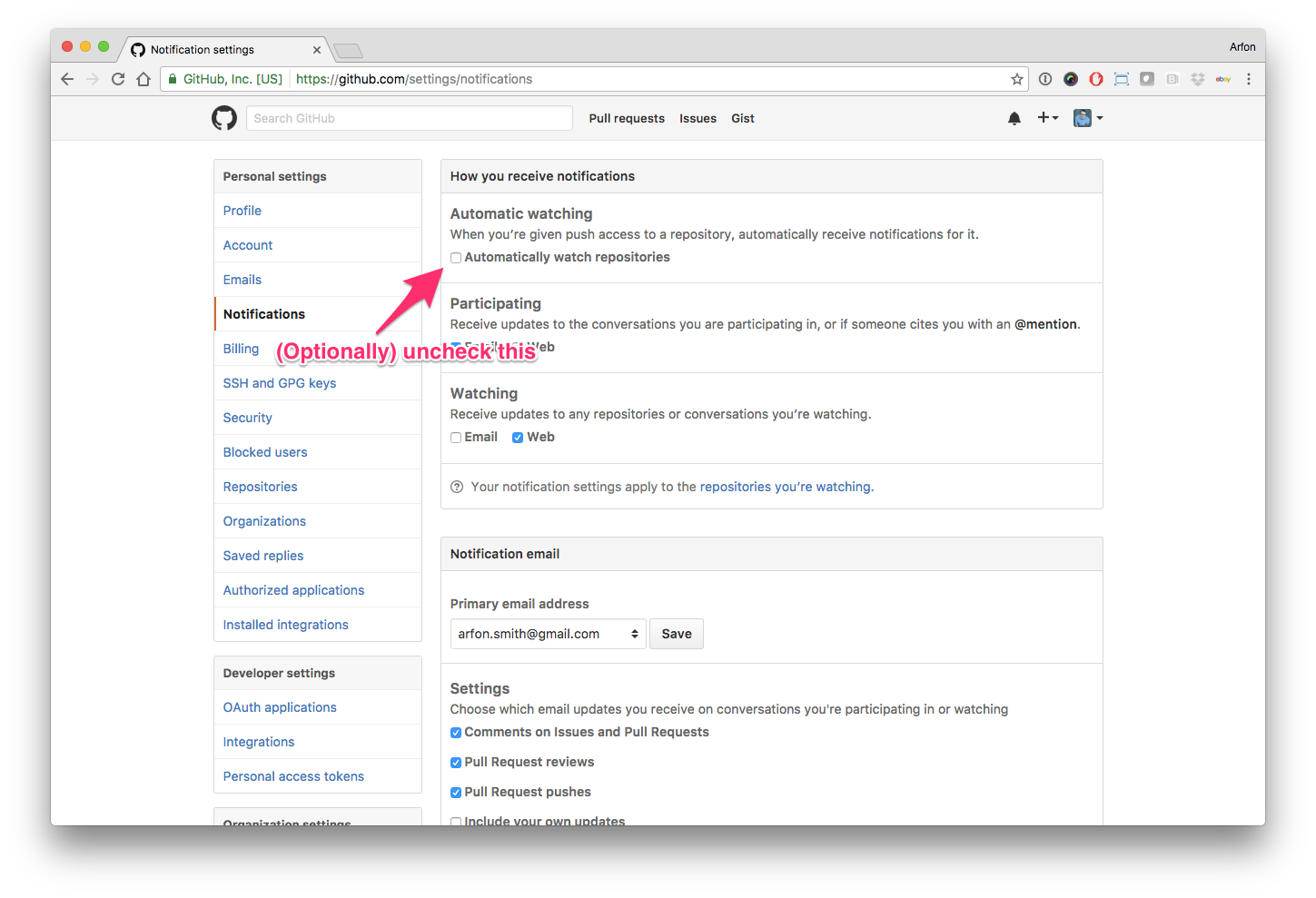

Hello human, I'm @whedon, a robot that can help you with some common editorial tasks. @terrytangyuan, it looks like you're currently assigned as the reviewer for this paper If you haven't already, you should seriously consider unsubscribing from GitHub notifications for this (https://github.com/openjournals/joss-reviews) repository. As a reviewer, you're probably currently watching this repository which means for GitHub's default behaviour you will receive notifications (emails) for all reviews To fix this do the following two things:

For a list of things I can do to help you, just type: |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

@nproellochs Could you clarify the differences between your package and reinforcelearn? Also, have you done any benchmarking or used the package in certain applications yet as I doubt that this package is suitable and performant enough for practical use cases given it's implemented purely in R? |

This comment has been minimized.

This comment has been minimized.

nproellochs

commented

Dec 1, 2018

|

@terrytangyuan The key differences between our package and the reinforcelearn package are as follows:

We also tested the suitability of the package in multiple application scenarios. For example, our paper "Negation scope detection in sentiment analysis: Decision support for news-driven trading" makes use of the package to learn negations based on reinforcement learning (the paper is available from https://www.sciencedirect.com/science/article/pii/S0167923616300823). |

This comment has been minimized.

This comment has been minimized.

|

@nproellochs By "performance", you meant the statistical performance, right? What about computational performance and benchmarks? RL is mostly popular in large scale applications but your package doesn't seem to support that. |

This comment has been minimized.

This comment has been minimized.

nproellochs

commented

Dec 3, 2018

|

@terrytangyuan In typical application scenarios, batch reinforcement learning also speeds up computational performance as it mitigates the ‘exploration overhead’ problem in pure online learning. In combination with experience replay, it speeds up convergence by collecting and replaying observed state transitions repeatedly to the agent as if they were new observations collected while interacting with the system. An overview of the method can be found in our paper (https://arxiv.org/pdf/1810.00240.pdf). Nonetheless, due to the fact that the package is written purely in R, I agree that its applicability to very large scale problems (such as applications in computer vision) is limited. |

This comment has been minimized.

This comment has been minimized.

|

|

This comment has been minimized.

This comment has been minimized.

|

@nproellochs Thanks for the clarification. I understand your point but I don't see how useful and practical this software can be if it cannot be applied to the most popular RL scenarios (mostly due to computational performance overhead). I am uncertain how valuable this is to publish in JOSS. @arfon How do we go from here? Any thoughts @kbenoit? |

This comment has been minimized.

This comment has been minimized.

|

Hi - Sorry for the delay. I'm currently on holidays but back to work on Jan 23 and happy to complete my review then. In the meantime: On the performance issue, this is really a question for the package and its authors to demonstrate. If there is a vignette or demonstration in the JOSS paper showing that despite being all R, that it can be useful for some applications, then I consider this a satisfactory answer to the question. Alternatively, the case could be made that it's a demonstration or learning package for RL for R users. Only if it's impractical for nearly any application because of performance issues would it be inappropriate as an R package or for JOSS. |

This comment has been minimized.

This comment has been minimized.

@terrytangyuan - this is a fair point. We have a sister journal JOSE which may be a better fit for this package if @kbenoit is of the same opinion as @terrytangyuan here.

That would be great. Thanks! |

This comment has been minimized.

This comment has been minimized.

|

Friendly reminder to pick up this review sometime soon please @kbenoit |

This comment has been minimized.

This comment has been minimized.

|

Sorry for the delay guys, here are my comments. All of my suggestions are below but on the JOSE option above, I think it has to do below with the purpose of the package and the need it addresses, which I think needs additional clarification before we can answer this definitively. Do I think the package should be rejected from JOSS because it cannot solve large-scale computer vision problems? No, not unless that is what it claims to solve. So for me, a key unanswered question is exactly what need it claims to meet. See more below. Checklist issues

Not exactly, as the GitHub repo has not tagged releases. Suggest you use this very useful facility. https://help.github.com/articles/creating-releases/. I can verify that the current master branch on the GitHub repo matches the submitted and the CRAN version.

I find this uncertain, since in the vignette, there is no actual execution of the code, and no interpretation or discussion of the results or the format in which they are delivered. How I am to understand the output of > head(policy(model))

.XXBB..XB XXBB.B.X. .XBB..BXX BXX...B.. ..XB..... XBXBXB...

"c1" "c5" "c5" "c4" "c5" "c9"

None exist in the repo or in the

None of the three checklist items are met in the current version of the paper. See below for my comments on the statement of need and the purpose of the package. Core issues relating to package's purposeIt's not entirely clear what the core purpose of the package is, given the performance limitations acknowledged above. Either this is a practical solution for applying reinforcement learning to problems that people need to solve, in which case there needs to be a discussion o of its performance limitations and some comparison to other possible solutions, in the paper and in my opinion, in the package vignette and/or repository README. The current statement of purpose (in the paper) suggests that the package exists to provide an R package for model-free learning, implying that its purpose is to provide a practical tool for applying this model to a broad class of problems. If so, then some discussion of performance is needed. Or, this is a purely R-based implementation of reinforcement learning that offers a way to test or take apart this class of machine learning model for primarily educational purposes. If this is the case, then JOSE is more appropriate. Either way, but especially in the second scenario, more complete documenation is needed, since any user is going to need to:

The man pages cite Sutton and Barto (1998). Why not implement one of the examples from that text using the package, as a vignette? Especially if this is primarily a learning tool, this would be really useful, but even if designed as a problem-solving tool, it will greatly help users connect the tool to a textbook that more fully explains parameter choices and provides further examples. Some options are never explained at all, such as Minor issuesNot every manual entry has examples, and often has very little discussion. Why not flesh this out, especially if the purpose is educational? Admittedly, I am a bit obsessed with naming, but I find the function names unintuitive and inconsistent, either non-descriptive (e.g. The R code is nicely written and very readable - so if the purpose is educational then it will serve that well, for those looking at source code. Although: please add a space after R CMD CHECK issuesOn the a full check, I am seeing:

|

This comment has been minimized.

This comment has been minimized.

|

|

This comment has been minimized.

This comment has been minimized.

|

@nproellochs - could you give us an update on your progress? |

This comment has been minimized.

This comment has been minimized.

|

Sorry to be slow on this, review by early next week at the latest. Been snowed under with something else but it's done now. |

This comment has been minimized.

This comment has been minimized.

nproellochs

commented

Apr 11, 2019

|

@arfon Sorry for the delay. I will give an update on this ASAP. Thank you @kbenoit & @terrytangyuan for the review and the valuable feedback. |

This comment has been minimized.

This comment has been minimized.

|

This comment has been minimized.

This comment has been minimized.

|

@arfon My feedback and opinion still remain unchanged for the reasons I mentioned earlier. I'd recommend @nproellochs submitting this article to other journals, e.g. JOSE mentioned above. |

This comment has been minimized.

This comment has been minimized.

Understood. Thanks @terrytangyuan. |

whedon

assigned

kbenoit and

terrytangyuan

whedon

assigned

kbenoit and

terrytangyuan

May 6, 2019

This comment has been minimized.

This comment has been minimized.

danielskatz

commented

May 20, 2019

|

|

This comment has been minimized.

This comment has been minimized.

nproellochs

commented

May 20, 2019

|

@arfon I am planning to incorporate the feedback next weekend. Is there a deadline for the revision? |

This comment has been minimized.

This comment has been minimized.

No, the timing is up to you. Please make sure though in your revisions you directly address the feedback from @kbenoit. Specifically:

In your response here I think you need to make a strong case for this being a JOSS submission rather than a JOSE one, especially given both reviewers have the same concern here. |

whedon commentedNov 19, 2018

•

edited by kbenoit

Submitting author: @nproellochs (Nicolas Proellochs)

Repository: https://github.com/nproellochs/ReinforcementLearning

Version: v1.0.2

Editor: @arfon

Reviewer: @terrytangyuan, @kbenoit

Archive: Pending

Status

Status badge code:

Reviewers and authors:

Please avoid lengthy details of difficulties in the review thread. Instead, please create a new issue in the target repository and link to those issues (especially acceptance-blockers) in the review thread below. (For completists: if the target issue tracker is also on GitHub, linking the review thread in the issue or vice versa will create corresponding breadcrumb trails in the link target.)

Reviewer instructions & questions

@terrytangyuan & @kbenoit, please carry out your review in this issue by updating the checklist below. If you cannot edit the checklist please:

The reviewer guidelines are available here: https://joss.theoj.org/about#reviewer_guidelines. Any questions/concerns please let @arfon know.

Review checklist for @terrytangyuan

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper

paper.mdfile include a list of authors with their affiliations?Review checklist for @kbenoit

Conflict of interest

Code of Conduct

General checks

Functionality

Documentation

Software paper

paper.mdfile include a list of authors with their affiliations?