Join GitHub today

GitHub is home to over 40 million developers working together to host and review code, manage projects, and build software together.

Sign upAdd method for determining binary file extension #349

Conversation

jrwiebe

added some commits

Aug 13, 2019

jrwiebe

requested a review

from ruebot

jrwiebe

requested a review

from ruebot

Aug 17, 2019

This comment has been minimized.

This comment has been minimized.

|

If we deprecated This isn't a big deal. I like removing the robots check to make the code more elegant. And theoretically a URL ending with "robots.txt" could actually be an image – though this is unlikely. |

This comment has been minimized.

This comment has been minimized.

codecov

bot

commented

Aug 17, 2019

•

Codecov Report

@@ Coverage Diff @@

## master #349 +/- ##

==========================================

+ Coverage 71.7% 75.38% +3.67%

==========================================

Files 38 39 +1

Lines 1428 1373 -55

Branches 331 265 -66

==========================================

+ Hits 1024 1035 +11

+ Misses 245 221 -24

+ Partials 159 117 -42 |

This comment has been minimized.

This comment has been minimized.

|

@jrwiebe go for it! It makes sense to have a single |

This comment has been minimized.

This comment has been minimized.

Sure! Let me know what you want, and I'll can get add a new test WARC or replace one or a couple. |

This comment has been minimized.

This comment has been minimized.

|

@ruebot How about Edited: no need for something like |

This comment has been minimized.

This comment has been minimized.

|

@jrwiebe you want |

This comment has been minimized.

This comment has been minimized.

|

@ruebot Yes |

This comment has been minimized.

This comment has been minimized.

|

@jrwiebe https://www.dropbox.com/s/tdegsqp4fjqcx8j/example.media.warc.gz -- that should do it. webrecorder.io did just displayed all the binary characters when I hit the gif with no extension. We'll see what happens there WARC record-wise. ...it should have all the existing files in it too. |

This comment has been minimized.

This comment has been minimized.

|

@ruebot Would you mind replacing |

This comment has been minimized.

This comment has been minimized.

|

...let's see what happens with this one: https://www.dropbox.com/s/lovjzrm9wkauzgc/temp-20190817230619.warc.gz |

This comment has been minimized.

This comment has been minimized.

|

I got a too many files open error on the most recent commit when I hit image extraction. Prior to that, I tested on 2c26dd0 and everything worked fine. test script |

This comment has been minimized.

This comment has been minimized.

|

I didn't get that in my test, but my WARCs might contain fewer files. Try throwing a |

This comment has been minimized.

This comment has been minimized.

jrwiebe

added some commits

Aug 18, 2019

This comment has been minimized.

This comment has been minimized.

|

@jrwiebe I can fix the tests and push up when I get some time tomorrow if you. I just have to tweak the layout. If you're cool with that, once it turns green, I can squash and merge. |

This comment has been minimized.

This comment has been minimized.

|

@ruebot I fixed the tests, but if you want to tweak them that's fine. I think we're ready to go. |

jrwiebe commentedAug 17, 2019

GitHub issue(s):

What does this Pull Request do?

This PR implements the strategy described in the discussion of the above issue to get an extension for a file described by a URL and a MIME type. It creates a

GetExtensionMimeobject in the matchbox.This PR also removes most of the filtering by URL from the image, audio, video, presentation, spreadsheet, and word processor document extraction methods, since these were returning false positives. (CSV and TSV files are a special case, since Tika detects them as "text/plain" based on content.)

Finally, I have inserted

toLowerCaseinto thegetUrl.endsWith()filter tests, which could possibly bring in some more CSV and TSV filesHow should this be tested?

Test by running something like the following script first on a build of

master, then modify the output path and do the same on a build ofget-extension. Depending on your input there may or may not be a difference between the sets of files that are extracted. If there is, the second run should have fewer files of all types except images, due to misidentification of files by URL in the first run (i.e., false positives), and they should all have extensions. BecauseextractImageDetailsDFwas using the MIME type stored in the archive record and not the detected version, the first run might produce fewer image files than the second (i.e.,masterwas producing false negatives); themasterversion's reliance on the URL extension could also produce false positives. Because we(Tip: You can use the MD5 hash in the filenames to identify files with the same content.)

Here are my results. For the document, spreadsheet, and presentation files I confirmed that files missing from the second run were files that had been misidentified in the first run (master branch).

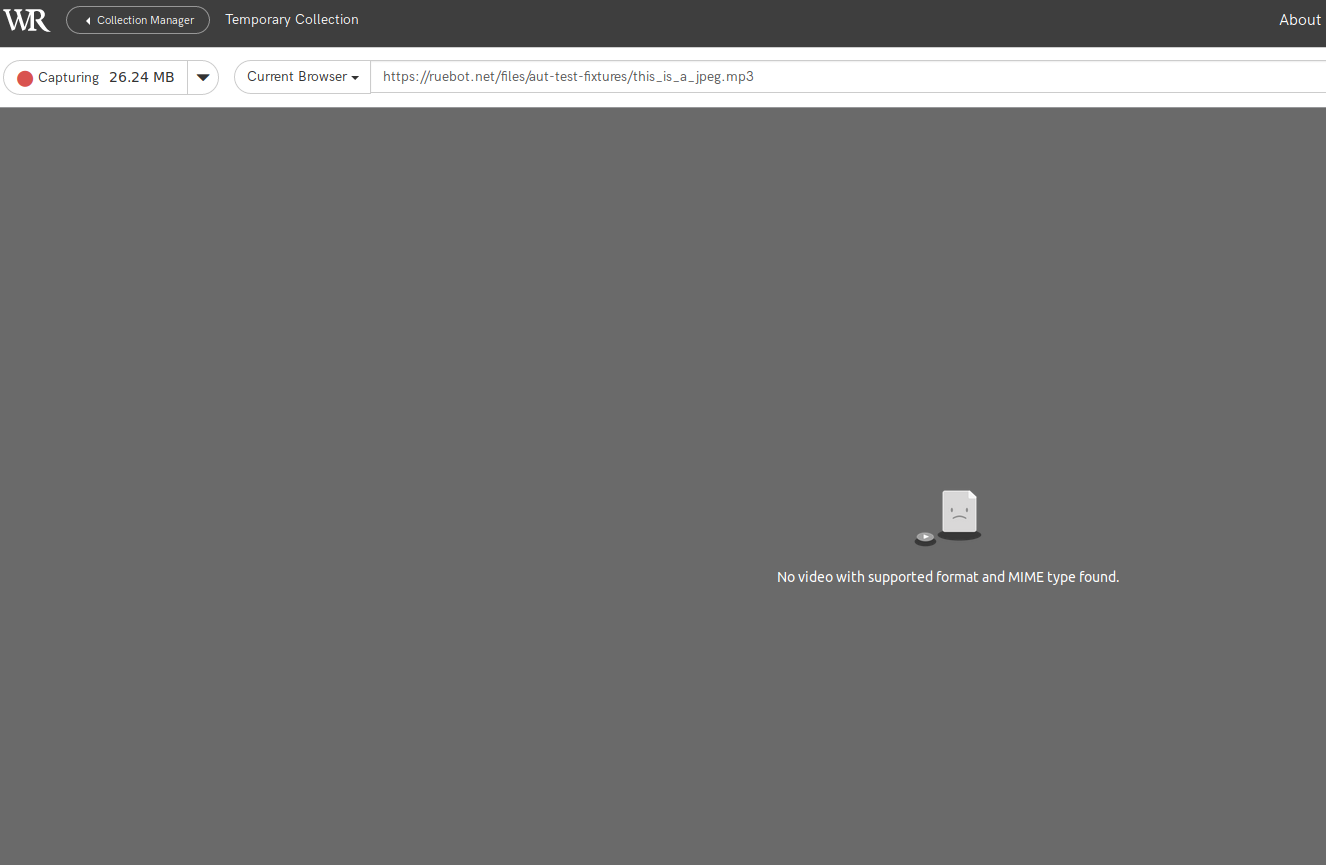

Admittedly mine wasn't a complete test, since it doesn't show how

GetExtensionMimewould handle a file with the wrong extension in the URL. @ruebot, since the tests you created recently reference actual files on your web server, maybe you could add a couple? To demonstrate how the method does work, see:(

mpgamight) be unexpected here, but it is the first in the list of extensions associated with the MIME typeaudio/mpeg. Oh, well.)Additional notes

Part of the reason for false positives was that blocks like this should be ANDs, not ORs.

I left the

!r.getUrl.endsWith("robots.txt")condition inkeepImages, because removing it caused a few files to be found that looked like GIFs and JPEGs, but which were named/robots.txtand which were incomplete, causingsaveImageToDiskto fail with ajava.io.EOFException.I don't know if we need to be using

saveImageToDisk. We could simply usesaveToDisk. This PR adds the "extension" (and "file") field to the DF returned byextractImageDetailsDF, so using the later save method is now an option.